Prerequisites

- A k8s cluster: three nodes is preferable, but a single node also works.

[root@k8s-master01 charts]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master01 Ready master 359d v1.18.15 192.168.3.180 <none> CentOS Linux 7 (Core) 3.10.0-1127.el7.x86_64 docker://19.3.5

k8s-master02 Ready master 359d v1.18.15 192.168.3.181 <none> CentOS Linux 7 (Core) 3.10.0-1127.el7.x86_64 docker://19.3.5

k8s-master03 Ready master 359d v1.18.15 192.168.3.182 <none> CentOS Linux 7 (Core) 3.10.0-1127.el7.x86_64 docker://19.3.5

k8s-node01 Ready node 357d v1.18.15 192.168.3.183 <none> CentOS Linux 7 (Core) 3.10.0-1127.el7.x86_64 docker://19.3.5

k8s-node02 Ready node 357d v1.18.15 192.168.3.184 <none> CentOS Linux 7 (Core) 3.10.0-1127.el7.x86_64 docker://19.3.5

- helm and nginx-ingress are installed on the k8s cluster

[root@k8s-master01 ~]# helm version

version.BuildInfo{Version:"v3.5.0", GitCommit:"32c22239423b3b4ba6706d450bd044baffdcf9e6", GitTreeState:"clean", GoVersion:"go1.15.6"}

[root@k8s-master01 ~]# helm list|grep nginx

ingress-nginx default 1 2021-02-02 19:35:03.810016289 +0800 CST deployed ingress-nginx-3.22.0 0.43.0

[root@k8s-master01 ~]# kubectl get svc|grep nginx

ingress-nginx-controller ClusterIP 10.110.65.147 <none> 80/TCP,443/TCP 359d

ingress-nginx-controller-admission ClusterIP 10.106.137.172 <none> 443/TCP 359d

- A StorageClass is installed on the k8s cluster and set as the default

[root@k8s-master01 ~]# kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local-path (default) rancher.io/local-path Delete WaitForFirstConsumer false 359d

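Here we install with helm. The chart we use is kube-prometheus-stack (https://prometheus-community.github.io/helm-charts/kube-prometheus-stack), which is hosted in the repository at https://prometheus-community.github.io/helm-charts.

Installing prometheus with helm

- Configure the helm repository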

[root@k8s-master01 ~]# helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

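- Install prometheus with helm

At the time of writing the latest chart version is 30.1.0, but its default installation has pitfalls, so we set it aside for now and install version 13.4.1 instead.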

[root@k8s-master01 ~]# kubectl create ns monitor

[root@k8s-master01 ~]# helm install prometheus prometheus-community/kube-prometheus-stack --version=13.4.1 -n monitor

NAME: prometheus

LAST DEPLOYED: Fri Jan 28 07:49:38 2022

NAMESPACE: monitor

STATUS: deployed

REVISION: 1

NOTES:

kube-prometheus-stack has been installed. Check its status by running:

kubectl --namespace monitor get pods -l "release=prometheus"

Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.

[root@k8s-master01 charts]#

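The command above deploys prometheus into kubernetes with the default configuration. By default the chart installs five pods (alertmanager, grafana, prometheus, prometheus-operator, and kube-state-metrics), plus one node-exporter pod for each node in the k8s cluster.

Verifying the installation

- List the created pods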

[root@k8s-master01 ~]# kubectl get po -n monitor

NAME READY STATUS RESTARTS AGE

alertmanager-prometheus-kube-prometheus-alertmanager-0 2/2 Running 0 6m12s

prometheus-grafana-7d988fdbfd-rxkgp 2/2 Running 0 6m25s

prometheus-kube-prometheus-operator-66c48678d6-fb7kv 1/1 Running 0 6m25s

prometheus-kube-state-metrics-c65b87574-848nt 1/1 Running 0 6m25s

prometheus-prometheus-kube-prometheus-prometheus-0 2/2 Running 0 6m12s

prometheus-prometheus-node-exporter-ltpm9 1/1 Running 0 6m25s

prometheus-prometheus-node-exporter-m5dsw 1/1 Running 0 6m25s

prometheus-prometheus-node-exporter-pl95j 1/1 Running 0 6m25s

prometheus-prometheus-node-exporter-qfflh 1/1 Running 0 6m25s

prometheus-prometheus-node-exporter-s7hx4 1/1 Running 0 6m25s

- List the created svc

[root@k8s-master01 ~]# kubectl get svc -n monitor

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 13m

prometheus-grafana ClusterIP 10.105.120.240 <none> 80/TCP 13m

prometheus-kube-prometheus-alertmanager ClusterIP 10.102.236.253 <none> 9093/TCP 13m

prometheus-kube-prometheus-operator ClusterIP 10.106.0.53 <none> 443/TCP 13m

prometheus-kube-prometheus-prometheus ClusterIP 10.109.139.214 <none> 9090/TCP 13m

prometheus-kube-state-metrics ClusterIP 10.97.203.125 <none> 8080/TCP 13m

prometheus-operated ClusterIP None <none> 9090/TCP 13m

prometheus-prometheus-node-exporter ClusterIP 10.106.174.214 <none> 9100/TCP 13m

- List the created pv and pvc

[root@k8s-master01 ~]# kubectl get pv,pvc -n monitor

No resources found in monitor namespace.

- List the created ingress

[root@k8s-master01 ~]# kubectl get ingress -n monitor

No resources found in monitor namespace.

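Enabling ingress and persistent storage

With the default installation, the chart exposes no external access endpoint and does not enable persistent storage. We need to set some parameters to enable ingress and persistent storage. If the release from the previous step is still installed, helm install fails because the name prometheus is already in use; either run helm uninstall prometheus -n monitor first, or use helm upgrade in place of helm install.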

helm install prometheus prometheus-community/kube-prometheus-stack --version=13.4.1 \
--set alertmanager.ingress.enabled=true \
--set alertmanager.ingress.hosts[0]=alertmanager.k8s.com \
--set alertmanager.ingress.paths[0]=/ \
--set alertmanager.alertmanagerSpec.storage.volumeClaimTemplate.spec.resources.requests.storage=5Gi \
--set grafana.ingress.enabled=true \
--set grafana.ingress.hosts[0]=grafana.k8s.com \
--set grafana.ingress.paths[0]=/ \
--set prometheus.ingress.enabled=true \
--set prometheus.ingress.hosts[0]=prometheus.k8s.com \
--set prometheus.ingress.paths[0]=/ \
--set prometheus.prometheusSpec.storageSpec.volumeClaimTemplate.spec.resources.requests.storage=5Gi \
-n monitor
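A long run of --set flags is easy to mistype, so the same settings can be kept in a values file instead. The sketch below is a direct transcription of the flags above into YAML and has not been checked against the chart's schema; the file name monitor-values.yaml is an assumption chosen for illustration.

```shell
# Write the --set flags from the command above into a values file.
# The file name is hypothetical; the keys mirror the flags one-to-one.
cat > monitor-values.yaml <<'EOF'
alertmanager:
  ingress:
    enabled: true
    hosts:
      - alertmanager.k8s.com
    paths:
      - /
  alertmanagerSpec:
    storage:
      volumeClaimTemplate:
        spec:
          resources:
            requests:
              storage: 5Gi
grafana:
  ingress:
    enabled: true
    hosts:
      - grafana.k8s.com
    paths:
      - /
prometheus:
  ingress:
    enabled: true
    hosts:
      - prometheus.k8s.com
    paths:
      - /
  prometheusSpec:
    storageSpec:
      volumeClaimTemplate:
        spec:
          resources:
            requests:
              storage: 5Gi
EOF
echo "wrote monitor-values.yaml"
```

The file can then be passed with -f in place of the flags, for example: helm upgrade --install prometheus prometheus-community/kube-prometheus-stack --version=13.4.1 -n monitor -f monitor-values.yaml. Using upgrade --install also works when the release already exists.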

- List the created pv and pvc

[root@k8s-master01 ~]# kubectl get pv,pvc -n monitor

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-5af2ea16-f8ec-4a8b-afc5-4f3dfe5787f4 5Gi RWO Delete Bound monitor/alertmanager-prometheus-kube-prometheus-alertmanager-db-alertmanager-prometheus-kube-prometheus-alertmanager-0 local-path 21m

persistentvolume/pvc-725f81b5-13ae-4f81-902d-9fccf6532663 5Gi RWO Delete Bound monitor/prometheus-prometheus-kube-prometheus-prometheus-db-prometheus-prometheus-kube-prometheus-prometheus-0 local-path 3m29s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/alertmanager-prometheus-kube-prometheus-alertmanager-db-alertmanager-prometheus-kube-prometheus-alertmanager-0 Bound pvc-5af2ea16-f8ec-4a8b-afc5-4f3dfe5787f4 5Gi RWO local-path 22m

persistentvolumeclaim/prometheus-prometheus-kube-prometheus-prometheus-db-prometheus-prometheus-kube-prometheus-prometheus-0 Bound pvc-725f81b5-13ae-4f81-902d-9fccf6532663 5Gi RWO local-path 4m11s

- List the created ingress

[root@k8s-master01 ~]# kubectl get ingress -n monitor

NAME CLASS HOSTS ADDRESS PORTS AGE

prometheus-grafana <none> grafana.k8s.com 10.110.65.147 80 5m13s

prometheus-kube-prometheus-alertmanager <none> alertmanager.k8s.com 10.110.65.147 80 5m13s

prometheus-kube-prometheus-prometheus <none> prometheus.k8s.com 10.110.65.147 80 5m13s

The chart supports many other settings as well; the full set of configurable values can be viewed with the following command:

helm show values prometheus-community/kube-prometheus-stack --version=13.4.1

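Accessing prometheus and related systems

- Map the IP to the domain names

Before accessing prometheus, grafana, and alertmanager, add the following IP-to-domain mapping on the client computer used for access: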

192.168.3.210 grafana.k8s.com alertmanager.k8s.com prometheus.k8s.com

The IP 192.168.3.210 can be obtained with the following command:

[root@k8s-master01 ~]# kubectl get configmap cluster-info -n kube-public -o yaml|grep server

server: https://192.168.3.210:6444

Tip: on Windows 10 or Windows 7, the file that holds these IP-to-domain mappings is C:\Windows\System32\drivers\etc\hosts.
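On a Linux or macOS client the same mapping goes into /etc/hosts. The helper below is a minimal sketch that appends the line only when it is not already present; the function name ensure_hosts_entry is hypothetical, and writing to /etc/hosts requires root privileges.

```shell
# ensure_hosts_entry FILE IP NAME...
# Append "IP NAME..." to FILE unless that exact line already exists.
# (Hypothetical helper for illustration, not part of any tool.)
ensure_hosts_entry() {
  hosts_file=$1
  ip=$2
  shift 2
  line="$ip $*"
  if grep -qxF "$line" "$hosts_file" 2>/dev/null; then
    echo "already present: $line"
  else
    printf '%s\n' "$line" >> "$hosts_file"
    echo "added: $line"
  fi
}

# Example, run as root:
#   ensure_hosts_entry /etc/hosts 192.168.3.210 \
#     grafana.k8s.com alertmanager.k8s.com prometheus.k8s.com
```

Because the check matches the whole line, running the helper repeatedly leaves a single entry in the file.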

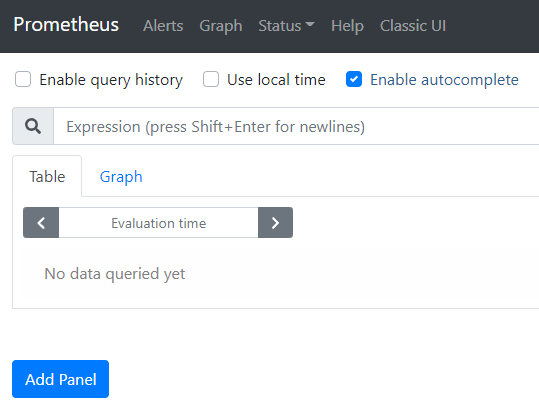

Accessing prometheus

Visit http://prometheus.k8s.com

Accessing grafana

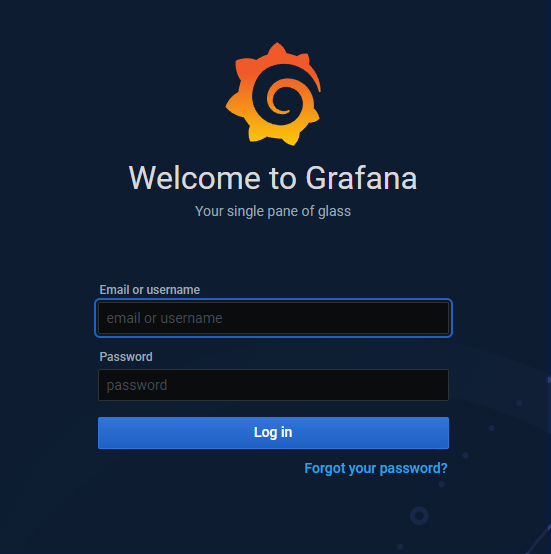

Visit http://grafana.k8s.com

The default username is admin and the password is prom-operator; the password can also be retrieved with the following command:

[root@k8s-master01 ~]# kubectl get secrets -n monitor prometheus-grafana -o yaml|grep admin-password|grep -v '{}'|awk '{print $2}'|base64 -d

prom-operator[root@k8s-master01 ~]#
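The grep and awk pipeline above depends on how the YAML happens to be laid out, and its output runs into the next prompt because the decoded value has no trailing newline. A slightly sturdier sketch, assuming the same secret name and namespace, asks kubectl for the field directly with a JSONPath expression; the two function names are hypothetical.

```shell
# Decode base64 from stdin and finish the output with a newline.
decode_b64() {
  base64 -d
  echo
}

# Print the grafana admin password stored in the prometheus-grafana secret.
# Requires kubectl with access to the monitor namespace.
grafana_admin_password() {
  kubectl get secret prometheus-grafana -n monitor \
    -o jsonpath='{.data.admin-password}' | decode_b64
}

# Example:
#   grafana_admin_password
```

The key admin-password contains a hyphen but no dots, so it can be used in the JSONPath expression without escaping.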

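Enter the username and password; after logging in you land on the home page shown below.

From the home page, click the Dashboards button in the left sidebar.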

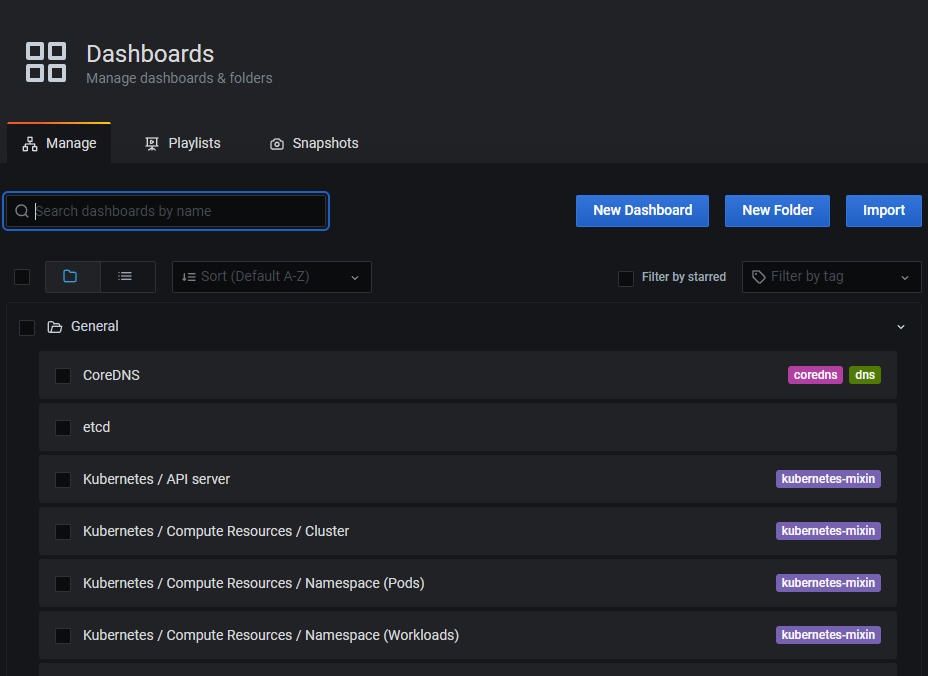

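The following page is displayed.

Open the "Default" folder shown above to see the many built-in dashboards listed below; feel free to open any that interest you.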

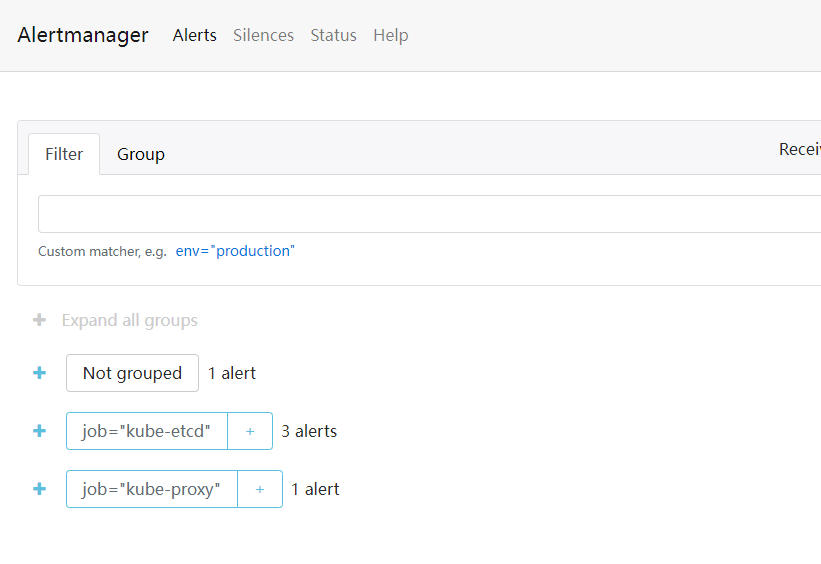

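Accessing alertmanager

Visit http://alertmanager.k8s.com

Summary

At this point the prometheus monitoring system is deployed. Running prometheus on a k8s cluster gives developers a pair of all-seeing eyes: the status of every server node and the usage of CPU, memory, storage, and network are visible at a glance. Beyond real-time monitoring of the pods and jobs running on every node, prometheus can also be extended to cover more monitoring scenarios.

Using a k8s cluster properly starts with installing the prometheus monitoring system.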
