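Background

kubeasz is an open-source project that uses ansible-playbook to automate the binary-based deployment and operation of k8s clusters. Its latest release at the time of writing is 3.4.1, which can quickly deploy k8s clusters up to version 1.25.3.

Installation plan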

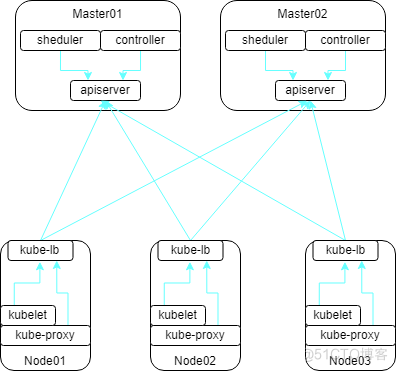

K8s HA-architecture

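The diagram above shows the k8s high-availability architecture installed by kubeasz.

A Kubernetes cluster installed with the kubeasz defaults has the following main characteristics:

- the etcd cluster is independent of the kubernetes cluster

- the three core kubernetes components all run as binaries

- every node is tuned with a basic performance baseline that suits common usage scenarios

- certificates are issued with a validity of 100 years

- the cluster achieves internal high availability through the kube-lb component

- kubernetes-dashboard is installed and ready to use out of the box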

Server specifications

| Server | CPU | Memory | Disk | OS |
|---|---|---|---|---|
| 10.0.1.121 | 3c | 8g | 40g | CentOS Linux 7.9 |
| 10.0.1.122 | 3c | 8g | 40g | CentOS Linux 7.9 |
| 10.0.1.123 | 3c | 8g | 40g | CentOS Linux 7.9 |

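The node roles required for the high-availability cluster are as follows

| Role | Servers | Description |
|---|---|---|
| Deployment node | 10.0.1.121 | Acts as the host that runs ansible/ezctl commands through the kubeasz container |
| etcd nodes | 10.0.1.121 10.0.1.122 10.0.1.123 | The etcd cluster needs an odd number of nodes (1, 3, 5, …); this walkthrough installs 3 nodes |
| master nodes | 10.0.1.121 10.0.1.122 | A high-availability cluster needs at least 2 master nodes; this walkthrough installs 2 nodes |
| node nodes | 10.0.1.123 | Nodes that run application workloads; any number is allowed; this walkthrough installs 1 node |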
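
The odd-member rule for etcd follows from majority quorum: a cluster of N members needs floor(N/2) + 1 of them to agree, so going from 3 to 4 members adds no extra fault tolerance. A minimal sketch of that arithmetic (the function names `etcd_quorum` and `etcd_fault_tolerance` are made up for illustration and are not part of kubeasz):

```shell
#!/usr/bin/env bash
# Quorum arithmetic for an etcd cluster of N members.
# quorum = floor(N/2) + 1; tolerated failures = N - quorum.

etcd_quorum() {
  local members=$1
  echo $(( members / 2 + 1 ))
}

etcd_fault_tolerance() {
  local members=$1
  echo $(( members - (members / 2 + 1) ))
}

# Print the numbers for small cluster sizes.
for n in 1 2 3 4 5; do
  echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

With the 3 etcd nodes planned here, the cluster keeps working if any single etcd node is lost.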

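This deployment uses 10.0.1.121 as the host and installs the k8s cluster online through the kubeasz container, using kubeasz 3.4.1, the latest release at the time of writing. The deployed cluster components are: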

- k8s: v1.25.3

- etcd: v3.5.4

- containerd: 1.6.8

- flannel: v0.19.2

- dashboard: v2.6.1

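Installation and deployment

All of the following operations are performed on the deployment node 10.0.1.121.

Prepare the environment

Prepare scripts, binaries, and image files

# Download the ezdown tool script, using kubeasz 3.4.1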

export release=3.4.1

wget https://github.com/easzlab/kubeasz/releases/download/${release}/ezdown

chmod +x ./ezdown

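# Download everything with the tool script

./ezdown -D

Run ./ezdown -D repeatedly until it reports "Action successed: download_all". At that point all scripts, binaries, and image files needed for the online installation have been downloaded to /etc/kubeasz.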
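
Re-running the download by hand can be wrapped in a small retry helper. The sketch below is only an illustration: `retry` is a hypothetical helper, and it assumes that ./ezdown exits with a non-zero status when a download fails, which this walkthrough does not verify.

```shell
#!/usr/bin/env bash
# retry MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it exits 0 or MAX_ATTEMPTS is reached.
retry() {
  local max_attempts=$1 delay=$2
  shift 2
  local attempt=1
  until "$@"; do
    if (( attempt >= max_attempts )); then
      echo "giving up after ${attempt} attempts: $*" >&2
      return 1
    fi
    echo "attempt ${attempt} failed, retrying in ${delay}s: $*" >&2
    attempt=$(( attempt + 1 ))
    sleep "$delay"
  done
}

# Example (not executed here): retry 5 10 ./ezdown -D
```

If the exit-status assumption does not hold, checking the output for the "Action successed: download_all" line is the fallback.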

Running ./ezdown -D also installs Docker on the control host as a side effect, which is handy even for on-premises delivery scenarios that do not involve a k8s cluster.

[root@k8s-master-121 ~]# docker version

Client:

Version: 20.10.18

API version: 1.41

Go version: go1.18.6

Git commit: b40c2f6

Built: Thu Sep 8 23:05:51 2022

OS/Arch: linux/amd64

Context: default

Experimental: true

Server: Docker Engine - Community

Engine:

Version: 20.10.18

API version: 1.41 (minimum version 1.12)

Go version: go1.18.6

Git commit: e42327a

Built: Thu Sep 8 23:11:23 2022

OS/Arch: linux/amd64

Experimental: false

containerd:

Version: v1.6.8

GitCommit: 9cd3357b7fd7218e4aec3eae239db1f68a5a6ec6

runc:

Version: 1.1.4

GitCommit: v1.1.4-0-g5fd4c4d1

docker-init:

Version: 0.19.0

GitCommit: de40ad0

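Next, run ./ezdown -X and ./ezdown -P to download the extra container images and the offline packages respectively; these files also go under /etc/kubeasz. Afterwards, the /etc/kubeasz directory can be compressed and archived together with the ezdown file for direct reuse later.

Set up passwordless SSH login to the servers

# Generate a key pair on the control host

ssh-keygen

# Enable passwordless login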

ssh-copy-id 10.0.1.121

ssh-copy-id 10.0.1.122

ssh-copy-id 10.0.1.123
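
With more nodes, the three ssh-copy-id calls are easier to manage as a loop. A minimal sketch, where the `NODES` array and the `distribute_key` function are illustrative names and the dry-run default (`DRY_RUN=1`) only prints the commands instead of running them:

```shell
#!/usr/bin/env bash
# Node IPs taken from the installation plan of this walkthrough.
NODES=(10.0.1.121 10.0.1.122 10.0.1.123)

# Print (DRY_RUN=1, the default) or run ssh-copy-id for every node given.
distribute_key() {
  local node
  for node in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ssh-copy-id ${node}"
    else
      ssh-copy-id "${node}"
    fi
  done
}

distribute_key "${NODES[@]}"
```

Setting `DRY_RUN=0` before the call performs the real key distribution, which still prompts for each node's password once.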

Start the kubeasz container

[root@k8s-master-121 ~]# ./ezdown -S

2022-10-24 09:14:50 INFO Action begin: start_kubeasz_docker

2022-10-24 09:14:50 INFO try to run kubeasz in a container

2022-10-24 09:14:50 DEBUG get host IP: 10.0.1.121

0e0a6aec80e4bc9a25c220b1bea57115daa0666e2cf6100e3bdf4aedfe537a89

2022-10-24 09:14:51 INFO Action successed: start_kubeasz_docker

Enter the kubeasz container and create cluster k8s-01

[root@k8s-master-121 ~]# docker exec -it kubeasz bash

bash-5.1# ezctl new k8s-01

2022-10-24 01:15:39 DEBUG generate custom cluster files in /etc/kubeasz/clusters/k8s-01

2022-10-24 01:15:39 DEBUG set versions

2022-10-24 01:15:39 DEBUG cluster k8s-01: files successfully created.

2022-10-24 01:15:39 INFO next steps 1: to config '/etc/kubeasz/clusters/k8s-01/hosts'

2022-10-24 01:15:39 INFO next steps 2: to config '/etc/kubeasz/clusters/k8s-01/config.yml'

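Following the prompts, edit hosts as shown below. In the hosts file, the server IPs in the four groups etcd, kube_master, kube_node, and ex_lb were adjusted to match the plan; note that only IPs may be used here, not hostnames. CONTAINER_RUNTIME should be set to containerd, and the other settings can stay unchanged. In this walkthrough the network plugin is flannel and NODE_PORT_RANGE is changed to "30-32767".

Note:

- CONTAINER_RUNTIME in the generated hosts file must not be docker; as the comment in the file itself says, docker is not supported for k8s versions >= 1.24.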

# 'etcd' cluster should have odd member(s) (1,3,5,...)

[etcd]

10.0.1.121

10.0.1.122

10.0.1.123

# master node(s)

[kube_master]

10.0.1.121

10.0.1.122

# work node(s)

[kube_node]

10.0.1.123

# [optional] harbor server, a private docker registry

# 'NEW_INSTALL': 'true' to install a harbor server; 'false' to integrate with existed one

[harbor]

#192.168.1.8 NEW_INSTALL=false

# [optional] loadbalance for accessing k8s from outside

[ex_lb]

10.0.1.121 LB_ROLE=backup EX_APISERVER_VIP=10.0.1.120 EX_APISERVER_PORT=8443

10.0.1.122 LB_ROLE=master EX_APISERVER_VIP=10.0.1.120 EX_APISERVER_PORT=8443

10.0.1.123 LB_ROLE=master EX_APISERVER_VIP=10.0.1.120 EX_APISERVER_PORT=8443

# [optional] ntp server for the cluster

[chrony]

#192.168.1.1

[all:vars]

# --------- Main Variables ---------------

# Secure port for apiservers

SECURE_PORT="6443"

# Cluster container-runtime supported: docker, containerd

# if k8s version >= 1.24, docker is not supported

CONTAINER_RUNTIME="containerd"

# Network plugins supported: calico, flannel, kube-router, cilium, kube-ovn

CLUSTER_NETWORK="flannel"

# Service proxy mode of kube-proxy: 'iptables' or 'ipvs'

PROXY_MODE="ipvs"

# K8S Service CIDR, not overlap with node(host) networking

SERVICE_CIDR="10.68.0.0/16"

# Cluster CIDR (Pod CIDR), not overlap with node(host) networking

CLUSTER_CIDR="172.20.0.0/16"

# NodePort Range

NODE_PORT_RANGE="30-32767"

# Cluster DNS Domain

CLUSTER_DNS_DOMAIN="cluster.local"

# -------- Additional Variables (don't change the default value right now) ---

# Binaries Directory

bin_dir="/opt/kube/bin"

# Deploy Directory (kubeasz workspace)

base_dir="/etc/kubeasz"

# Directory for a specific cluster

cluster_dir="{{ base_dir }}/clusters/k8s-01"

# CA and other components cert/key Directory

ca_dir="/etc/kubernetes/ssl"
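
Before running the installation it can be worth checking the edited inventory mechanically. The following is a rough sketch, not a kubeasz feature: `inventory_hosts` and `check_etcd_odd` are hypothetical helpers that read an INI-style inventory such as /etc/kubeasz/clusters/k8s-01/hosts, list the hosts of one group, and confirm that the etcd group has an odd number of members.

```shell
#!/usr/bin/env bash
# List the host entries found under one [section] of an INI-style inventory.
inventory_hosts() {
  local section=$1 file=$2
  awk -v want="[$section]" '
    /^\[/ { in_section = ($1 == want); next }      # a new section header starts
    in_section && NF && $1 !~ /^#/ { print $1 }    # non-empty, non-comment host line
  ' "$file"
}

# Succeed only if the [etcd] group has an odd number of members.
check_etcd_odd() {
  local count
  count=$(inventory_hosts etcd "$1" | wc -l)
  (( count % 2 == 1 ))
}

# Example (not executed here):
#   inventory_hosts kube_master /etc/kubeasz/clusters/k8s-01/hosts
#   check_etcd_odd /etc/kubeasz/clusters/k8s-01/hosts && echo "etcd member count is odd"
```

Commented-out entries such as the sample harbor and chrony hosts are skipped, so an unused group simply yields no output.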

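Start the installation

docker exec -it kubeasz bash

# One-step installation, equivalent to running docker exec -it kubeasz ezctl setup k8s-01 all

bash-5.1# ezctl setup k8s-01 all

# Step-by-step installation: run playbooks 01 through 07 one at a time

# 01 - create certificates and prepare the environment

bash-5.1# ezctl setup k8s-01 01

# 02 - install the etcd cluster

bash-5.1# ezctl setup k8s-01 02

# 03 - install the container runtime (docker or containerd)

bash-5.1# ezctl setup k8s-01 03

# 04 - install the kube_master nodes

bash-5.1# ezctl setup k8s-01 04

# 05 - install the kube_node nodes

bash-5.1# ezctl setup k8s-01 05

# 06 - install the network plugin

bash-5.1# ezctl setup k8s-01 06

# 07 - install the main cluster add-ons

bash-5.1# ezctl setup k8s-01 07

Run the one-step installation and wait for it to finish; output like the following means the installation is complete. A step-by-step installation is also possible: kubeasz breaks the Kubernetes installation down into 7 steps, each with its own installation task, which readers can try for themselves.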

PLAY RECAP *********************************************************************************************************************************************************************************

10.0.1.121 : ok=108 changed=98 unreachable=0 failed=0 skipped=170 rescued=0 ignored=1

10.0.1.122 : ok=101 changed=93 unreachable=0 failed=0 skipped=146 rescued=0 ignored=1

10.0.1.123 : ok=93 changed=86 unreachable=0 failed=0 skipped=171 rescued=0 ignored=1

localhost : ok=33 changed=29 unreachable=0 failed=0 skipped=11 rescued=0 ignored=0

Verify the installation

Log in to the master 10.0.1.121 and confirm that the installation succeeded.

# Shows each node's readiness status, role, age, and version

[root@k8s-master-121 ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

10.0.1.121 NotReady,SchedulingDisabled master 6m56s v1.25.3 10.0.1.121 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.8

10.0.1.122 NotReady,SchedulingDisabled master 6m55s v1.25.3 10.0.1.122 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.8

10.0.1.123 NotReady node 6m12s v1.25.3 10.0.1.123 <none> CentOS Linux 7 (Core) 3.10.0-1160.el7.x86_64 containerd://1.6.8

# The scheduler, controller-manager, and etcd components should be Healthy

[root@k8s-master-121 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

controller-manager Healthy ok

etcd-1 Healthy {"health":"true","reason":""}

etcd-2 Healthy {"health":"true","reason":""}

# The kubernetes master (apiserver) component should be running

[root@k8s-master-121 ~]# kubectl cluster-info

Kubernetes control plane is running at https://10.0.1.121:6443

CoreDNS is running at https://10.0.1.121:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

KubeDNSUpstream is running at https://10.0.1.121:6443/api/v1/namespaces/kube-system/services/kube-dns-upstream:dns/proxy

kubernetes-dashboard is running at https://10.0.1.121:6443/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# Lists all pods in the cluster; by default the flannel network plugin, coredns, metrics-server, etc. are installed

[root@k8s-master-121 ~]# kubectl get po --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6994896589-l2d25 0/1 Pending 0 7m47s

kube-system dashboard-metrics-scraper-86cbf5f9dd-bbm2p 0/1 Pending 0 7m43s

kube-system kube-flannel-ds-5dqmc 0/1 Init:0/2 0 9m55s

kube-system kube-flannel-ds-6nq4m 0/1 Init:0/2 0 9m55s

kube-system kube-flannel-ds-jrs5c 0/1 Init:0/2 0 9m55s

kube-system kubernetes-dashboard-55cbd7c65d-nslcf 0/1 Pending 0 7m43s

kube-system metrics-server-846ddc9f47-v9bgt 0/1 Pending 0 7m45s

kube-system node-local-dns-d8n7r 0/1 ContainerCreating 0 7m46s

kube-system node-local-dns-mkjl9 0/1 ContainerCreating 0 7m46s

kube-system node-local-dns-ph65z 0/1 ContainerCreating 0 7m46s

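The pods above are all stuck in Pending, Init, or ContainerCreating, and the nodes are NotReady, so something is clearly wrong. The following fix, which upgrades libseccomp and restarts containerd, resolved it:

rpm -e libseccomp-devel-2.3.1-4.el7.x86_64 --nodeps

rpm -ivh libseccomp-2.4.1-0.el7.x86_64.rpm

systemctl restart containerd

The libseccomp-2.4.1-0.el7.x86_64.rpm package has to be downloaded separately beforehand.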
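
To see at a glance which pods are still stuck before and after such a fix, the kubectl listing can be filtered. A small sketch, where `unhealthy_pods` is a made-up helper that reads `kubectl get po --all-namespaces` output on stdin and only looks at the STATUS column:

```shell
#!/usr/bin/env bash
# Read `kubectl get po --all-namespaces` output on stdin and print
# "namespace/name status" for every pod whose STATUS column is
# neither Running nor Completed. The header line is skipped.
unhealthy_pods() {
  awk 'NR > 1 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Example (not executed here):
#   kubectl get po --all-namespaces | unhealthy_pods
```

An empty result means every pod reports Running or Completed; it does not check the READY column, so a Running pod with failing readiness probes would not be listed.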

# Lists all services in the cluster

[root@k8s-master-121 ~]# kubectl get svc --all-namespaces

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.68.0.1 <none> 443/TCP 12m

kube-system dashboard-metrics-scraper ClusterIP 10.68.74.14 <none> 8000/TCP 8m34s

kube-system kube-dns ClusterIP 10.68.0.2 <none> 53/UDP,53/TCP,9153/TCP 8m38s

kube-system kube-dns-upstream ClusterIP 10.68.46.118 <none> 53/UDP,53/TCP 8m37s

kube-system kubernetes-dashboard NodePort 10.68.8.225 <none> 443:6546/TCP 8m34s

kube-system metrics-server ClusterIP 10.68.197.207 <none> 443/TCP 8m36s

kube-system node-local-dns ClusterIP None <none> 9253/TCP 8m37s

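With that, the k8s cluster installation has been verified.

Access kubernetes-dashboard

The service listing in the verification step shows that kubernetes-dashboard is installed by default and exposed as a NodePort service (443:6546/TCP). It can therefore be reached at https://{IP}:6546, where {IP} can be the IP of any node.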
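
The NodePort is the number after the colon in the PORT(S) column. As a small illustration (the helper names `node_port_of` and `dashboard_url` are invented for this sketch), the URL can be assembled with plain shell parameter expansion:

```shell
#!/usr/bin/env bash
# Extract the NodePort from a PORT(S) value such as "443:6546/TCP".
node_port_of() {
  local ports=$1
  local after_colon=${ports#*:}   # drop the service port, e.g. "443:" -> "6546/TCP"
  echo "${after_colon%%/*}"       # drop the protocol, e.g. "/TCP" -> "6546"
}

# Build the dashboard URL from a node IP and the PORT(S) value.
dashboard_url() {
  local node_ip=$1 ports=$2
  echo "https://${node_ip}:$(node_port_of "$ports")"
}

dashboard_url 10.0.1.121 "443:6546/TCP"   # prints https://10.0.1.121:6546
```

On a live cluster, `kubectl -n kube-system get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'` returns the same port without any text parsing.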

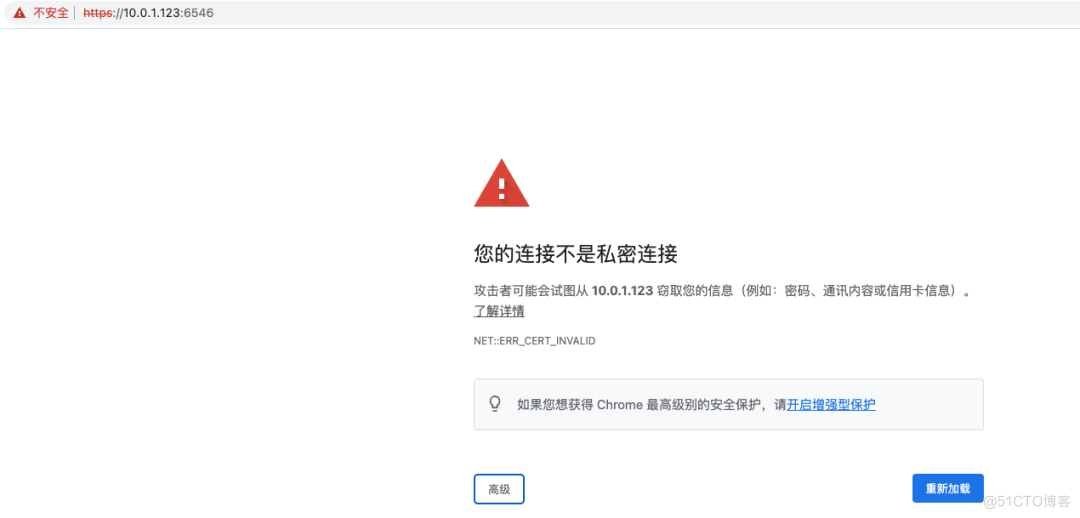

这里我们使用chrome浏览器访问,显示如下

随便点击页面的空白处,然后输入:thisisunsafe

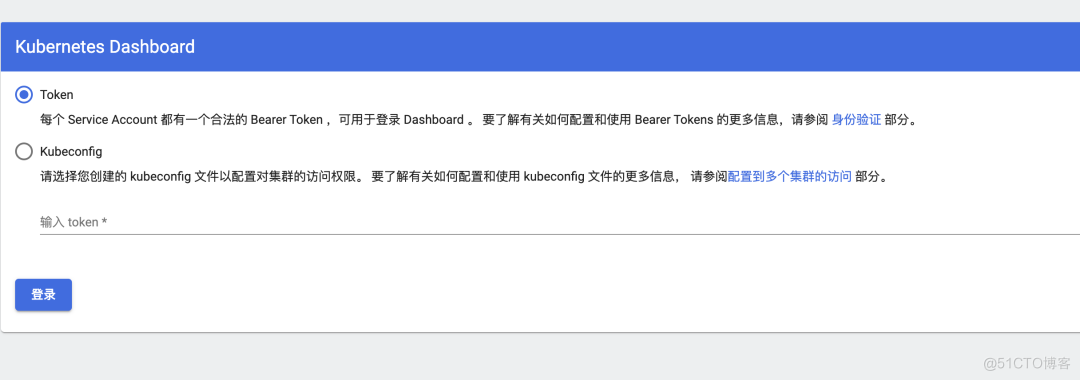

页面正常打开如下

从master节点查看token并使用token登录(这里为了方便,我们可以直接使用admin-user的token)

[root@k8s-master-121 ~]# kubectl get po --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6994896589-l2d25 1/1 Running 0 40m

kube-system dashboard-metrics-scraper-86cbf5f9dd-bbm2p 1/1 Running 0 40m

kube-system kube-flannel-ds-5dqmc 1/1 Running 0 42m

kube-system kube-flannel-ds-6nq4m 1/1 Running 0 42m

kube-system kube-flannel-ds-jrs5c 1/1 Running 0 42m

kube-system kubernetes-dashboard-55cbd7c65d-nslcf 1/1 Running 0 40m

kube-system metrics-server-846ddc9f47-v9bgt 1/1 Running 0 40m

kube-system node-local-dns-d8n7r 1/1 Running 0 40m

kube-system node-local-dns-mkjl9 1/1 Running 0 40m

kube-system node-local-dns-ph65z 1/1 Running 0 40m

[root@k8s-master-121 ~]# kubectl get secret -n kube-system

NAME TYPE DATA AGE

admin-user kubernetes.io/service-account-token 3 43m

dashboard-read-user kubernetes.io/service-account-token 3 43m

kubernetes-dashboard-certs Opaque 0 43m

kubernetes-dashboard-csrf Opaque 1 43m

kubernetes-dashboard-key-holder Opaque 2 43m

[root@k8s-master-121 ~]# kubectl describe secret -n kube-system admin-user

Name: admin-user

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: c7ecc9a3-df56-435d-b69d-b40cd6b59742

Type: kubernetes.io/service-account-token

Data

====

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IjNlWS1RbWRKWWxjamV1SzlVcTRnS1JYYUVBandIb1BFMGFrRVJUcEV2ZEUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJjN2VjYzlhMy1kZjU2LTQzNWQtYjY5ZC1iNDBjZDZiNTk3NDIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.eY8_bXkFO_85n7SmjZ5C_ou_JkytIFOwe58MeywEnbQZ1k-gSGb65ow_6ocpX7Oxf3Jd2_Y2uHlBYKCsRci_mqx6ne9RFDxoxDMiWqPYa3uSxdv2OJ-noFa8SOwgDB0Fs4YqA5yNocc9VxSxfim7KDUgRCHAq0Kd6-qOpapv3zvJ5Bzi70Q8eWmMgr4yW_8O6bvLEzhevgMmn7UivZQAB9aDoUhsWX7VC8mgQcU6ABy86fDMZPs3HP_-n0zgKSqlyBA7dklATyj5AQgU_BlnVL5ZEfqQGAg8QrD_waQo5bSDNJau3dKbDR6x9KiaTiPLqniC1D1YDvXZVmJ18Esvtw

ca.crt: 1302 bytes

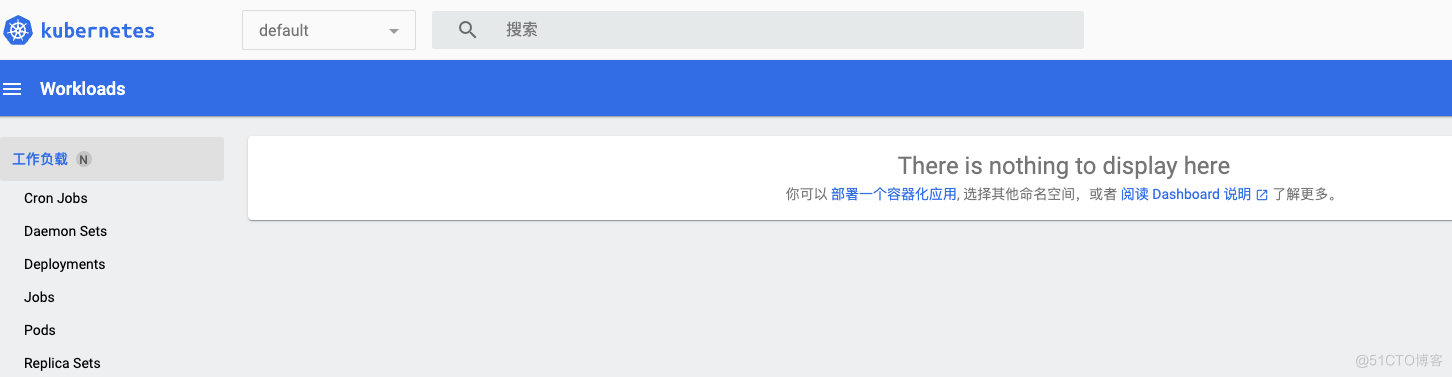

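Log in to kubernetes-dashboard with the admin-user token; the view after login is shown below

Restore scheduling on the masters

By default, pods are not scheduled onto master nodes. The following command re-enables scheduling on the masters so that they can run pods as well.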

[root@k8s-master-121 ~]# kubectl uncordon 10.0.1.121 10.0.1.122

node/10.0.1.121 uncordoned

node/10.0.1.122 uncordoned

[root@k8s-master-121 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

10.0.1.121 Ready master 49m v1.25.3

10.0.1.122 Ready master 49m v1.25.3

10.0.1.123 Ready node 48m v1.25.3

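kubeasz bundles many common components, including helm, nginx-ingress, prometheus, and more, which readers can explore on their own. Here I did not use the kubeasz built-in modules and instead installed helm, nginx-ingress, and storage (local-path) manually. The installation process is recorded below.

Install helm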

[root@k8s-master-121 ~]# wget https://get.helm.sh/helm-v3.10.1-linux-amd64.tar.gz

[root@k8s-master-121 ~]# tar -zxvf helm-v3.10.1-linux-amd64.tar.gz

linux-amd64/

linux-amd64/helm

linux-amd64/LICENSE

linux-amd64/README.md

[root@k8s-master-121 ~]# cp linux-amd64/helm /usr/bin

[root@k8s-master-121 ~]# helm version

version.BuildInfo{Version:"v3.10.1", GitCommit:"9f88ccb6aee40b9a0535fcc7efea6055e1ef72c9", GitTreeState:"clean", GoVersion:"go1.18.7"}

Install nginx-ingress

- Download ingress

[root@k8s-master-121 ~]# helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

"ingress-nginx" has been added to your repositories

[root@k8s-master-121 ~]# helm pull ingress-nginx/ingress-nginx --version=4.2.3

[root@k8s-master-121 ~]# tar -zxvf ingress-nginx-4.2.3.tgz

- Edit the values.yaml of ingress-nginx

The changes are as follows

# Changes to the controller image

# Change registry to registry-1.docker.io

registry: registry-1.docker.io

# Change the image location

image: jiamiao442/ingress-nginx-controller

# Comment out digest

# digest: sha256:35fe394c82164efa8f47f3ed0be981b3f23da77175bbb8268a9ae438851c8324

# Changes to the patch image

# Change registry to registry-1.docker.io

registry: registry-1.docker.io

# Change the image location

image: jiamiao442/kube-webhook-certgen

# Comment out digest

# digest: sha256:549e71a6ca248c5abd51cdb73dbc3083df62cf92ed5e6147c780e30f7e007a47

# Changes to the defaultbackend image

# Change registry to registry-1.docker.io

registry: registry-1.docker.io

# Change the image location

image: mirrorgooglecontainers/defaultbackend-amd64

# dnsPolicy

dnsPolicy: ClusterFirstWithHostNet

# Use a DaemonSet so that ingress is deployed on the designated nodes

kind: DaemonSet

# Set hostNetwork to true

hostNetwork: true

# Change type to ClusterIP

type: ClusterIP

# A service on port 8443 is started by default, which conflicts with ex-lb, so move it to 28443 for now

port: 28443

- Install

[root@k8s-master-121 ingress-nginx]# helm install ingress-nginx ./

NAME: ingress-nginx

LAST DEPLOYED: Mon Oct 24 14:50:10 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

Get the application URL by running these commands:

export POD_NAME=$(kubectl --namespace default get pods -o jsonpath="{.items[0].metadata.name}" -l "app=ingress-nginx,component=controller,release=ingress-nginx")

kubectl --namespace default port-forward $POD_NAME 8080:80

echo "Visit http://127.0.0.1:8080 to access your application."

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: example

namespace: foo

spec:

ingressClassName: nginx

rules:

- host: www.example.com

http:

paths:

- pathType: Prefix

backend:

service:

name: exampleService

port:

number: 80

path: /

# This section is only required if TLS is to be enabled for the Ingress

tls:

- hosts:

- www.example.com

secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1

kind: Secret

metadata:

name: example-tls

namespace: foo

data:

tls.crt: <base64 encoded cert>

tls.key: <base64 encoded key>

type: kubernetes.io/tls

- Confirm that nginx-ingress is installed correctly.

[root@k8s-master-121 ingress-nginx]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller ClusterIP 10.68.146.139 <none> 80/TCP,443/TCP 75s

ingress-nginx-controller-admission ClusterIP 10.68.35.155 <none> 443/TCP 75s

kubernetes ClusterIP 10.68.0.1 <none> 443/TCP 3h58m

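Open http://10.0.1.121/ in a browser; the page looks like this:

Accessing http://10.0.1.122/ and http://10.0.1.123/ as well confirms that nginx-ingress is installed correctly.

Enable ex-lb

kubeasz integrates ex-lb, which in turn bundles the keepalived service, so the nodes can be reached through a virtual IP instead of their physical IPs. In this experiment ex-lb is configured on all three nodes (10.0.1.121, 10.0.1.122, and 10.0.1.123), and the virtual IP is 10.0.1.120.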

[root@k8s-master-121 ingress-nginx]# docker exec -it kubeasz bash

# Install ex-lb

bash-5.1# ezctl setup k8s-01 10

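Once the installation finishes, http://10.0.1.120/ can be opened in a browser; the page looks like this:

Install local-path storage

A k8s cluster has no storage component configured by default, so only hostPath can be used. Installing at least one storage component ensures that certain scenarios are supported; here I chose local-path-provisioner.

- Download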

[root@k8s-master-121 ~]# wget https://github.com/rancher/local-path-provisioner/archive/v0.0.22.tar.gz

[root@k8s-master-121 ~]# tar -zxvf v0.0.22.tar.gz

[root@k8s-master-121 ~]# cd local-path-provisioner-0.0.22

- Install

[root@k8s-master-121 local-path-provisioner-0.0.22]# kubectl apply -f deploy/local-path-storage.yaml

namespace/local-path-storage created

serviceaccount/local-path-provisioner-service-account created

clusterrole.rbac.authorization.k8s.io/local-path-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind created

deployment.apps/local-path-provisioner created

storageclass.storage.k8s.io/local-path created

configmap/local-path-config created

- Set local-path as the default storage class

[root@k8s-master-121 local-path-provisioner-0.0.22]# kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/local-path patched

[root@k8s-master-121 local-path-provisioner-0.0.22]# kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.beta.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/local-path patched

[root@k8s-master-121 local-path-provisioner-0.0.22]# kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local-path (default) rancher.io/local-path Delete WaitForFirstConsumer false 2m29s
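
The "(default)" marker in the listing is what confirms the patch took effect. A tiny sketch for checking this in a script, where `default_storageclass` is a hypothetical helper that reads `kubectl get storageclass` output on stdin:

```shell
#!/usr/bin/env bash
# Read `kubectl get storageclass` output on stdin and print the name of
# every class that kubectl marks with "(default)" after its name.
default_storageclass() {
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}

# Example (not executed here):
#   kubectl get storageclass | default_storageclass
```

For the listing in this walkthrough the helper prints local-path; no output means that no default class is set.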

- Verify that local-path works

[root@k8s-master-121 local-path-provisioner-0.0.22]# kubectl apply -f examples/pod/pod.yaml,examples/pvc/pvc.yaml

pod/volume-test created

persistentvolumeclaim/local-path-pvc created

# Not provisioned yet

[root@k8s-master-121 local-path-provisioner-0.0.22]# kubectl get pvc,pv

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/local-path-pvc Pending local-path 9s

# Provisioned successfully

[root@k8s-master-121 local-path-provisioner-0.0.22]# kubectl get pvc,pv

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/local-path-pvc Bound pvc-5181faf9-40a1-4970-8104-452c26f9af51 128Mi RWO local-path 43s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-5181faf9-40a1-4970-8104-452c26f9af51 128Mi RWO Delete Bound default/local-path-pvc local-path 33s
