Installation preparation

- Virtual machines: 3 machines running CentOS 7.8

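In this walkthrough, three virtual machines with CentOS 7.8 already installed form a highly available k8s cluster. Each VM runs HAProxy in Docker for load balancing and keepalived in Docker for the virtual IP. The Docker version is 19.03.5, the k8s version is 1.18.15, and the cluster is installed with kubeadm.

The installation plan is as follows: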

| hostname | ip | os | kernel | cpu | memory | disk | role |
|---|---|---|---|---|---|---|---|
| k8s-master01 | 192.168.5.220 | centos7.8 | 3.10.0-1127.el7.x86_64 | 3c | 8g | 40g | master |
| k8s-master02 | 192.168.5.221 | centos7.8 | 3.10.0-1127.el7.x86_64 | 3c | 8g | 40g | master |
| k8s-master03 | 192.168.5.222 | centos7.8 | 3.10.0-1127.el7.x86_64 | 3c | 8g | 40g | master |

CentOS 7.8 system tuning

- ulimit tuning

echo -ne "

* soft nofile 65536

* hard nofile 65536

" >>/etc/security/limits.conf

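- Time zone

cp -f /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

- Configure time synchronization

chrony is used for time synchronization and is installed by default on CentOS 7. Here the time source is changed so that every node synchronizes with public network time servers.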

# Comment out the default NTP servers

sed -i 's/^server/#&/' /etc/chrony.conf

# Add upstream public NTP servers

cat >> /etc/chrony.conf << EOF

server 0.asia.pool.ntp.org iburst

server 1.asia.pool.ntp.org iburst

server 2.asia.pool.ntp.org iburst

server 3.asia.pool.ntp.org iburst

EOF

# Restart chronyd and enable it on boot

systemctl enable chronyd && systemctl restart chronyd

- Chinese locale

localectl set-locale LANG=zh_CN.utf8

- Turn off the firewall

systemctl stop firewalld && systemctl disable firewalld

- Disable SELinux

# Check the SELinux status

getenforce

# Disable SELinux temporarily

setenforce 0

# Disable permanently (requires a reboot)

sed -i 's/^ *SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

- Disable swap

# Disable temporarily

swapoff -a

# Disable permanently (to keep swap off after a reboot, also comment out the swap entries in /etc/fstab)

sed -i.bak '/swap/s/^/#/' /etc/fstab
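The sed command above prepends another `#` each time it is run, and it also matches any line that merely contains the word swap. A minimal sketch of a re-runnable variant follows; the function name `comment_swap_entries` is made up for illustration, and the file path is a parameter so it can be tried on a copy first.

```shell
#!/bin/sh
# Comment out active swap entries in an fstab-style file.
# Lines that are already commented are skipped, so re-running is safe.
comment_swap_entries() {
    fstab="$1"
    # Only touch uncommented lines that have "swap" as a whitespace-delimited field.
    sed -i.bak '/^[[:space:]]*#/!{/[[:space:]]swap[[:space:]]/s/^/#/;}' "$fstab"
}
```

For example, `comment_swap_entries /etc/fstab` leaves a backup in /etc/fstab.bak, as the original command does.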

- Kernel parameters

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

EOF

sysctl --system

- Enable IPVS support

yum -y install ipvsadm ipset

# Load the modules for the current boot

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

# Load the modules on every boot

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

Install Docker and Kubernetes

This walkthrough installs Docker 19.03.5 and Kubernetes 1.18.15.

- Install the required packages

yum install -y yum-utils \

device-mapper-persistent-data \

lvm2

- Use the Aliyun mirror repository

yum-config-manager \

--add-repo \

http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

- Install Docker

yum install docker-ce-19.03.5 docker-ce-cli-19.03.5 containerd.io

- Install Kubernetes

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Refresh the cache

yum clean all

yum -y makecache

# List the available versions

yum list kubelet --showduplicates | sort -r

# The latest version at the time of writing is 1.20.2-0; this walkthrough installs 1.18.15

# Install kubelet, kubeadm and kubectl

yum install -y kubelet-1.18.15 kubeadm-1.18.15 kubectl-1.18.15

- Change the cgroup driver

Edit /etc/docker/daemon.json and add "exec-opts": ["native.cgroupdriver=systemd"]
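As a minimal sketch of that edit, assuming daemon.json does not exist yet or holds nothing worth keeping (the function below overwrites the file), the setting could be written like this. The function name `write_docker_daemon_json` is made up for illustration.

```shell
#!/bin/sh
# Write a minimal Docker daemon config that selects the systemd cgroup driver.
# WARNING: this overwrites the target file; merge by hand if it already has settings.
# The path is a parameter so the function can be tried on a scratch file.
write_docker_daemon_json() {
    target="${1:-/etc/docker/daemon.json}"
    mkdir -p "$(dirname "$target")"
    cat > "$target" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After Docker has been restarted, `docker info | grep -i 'cgroup driver'` should report systemd.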

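- Enable the services on boot and restart Docker

systemctl daemon-reload && systemctl enable docker && systemctl restart docker && systemctl enable kubelet

- Download the images for this Kubernetes version

Pulling the k8s images directly usually runs into network problems. As a workaround, pull the matching images from Aliyun first and then retag them.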

kubeadm config images list --kubernetes-version=v1.18.15

W0126 21:22:00.485045 24826 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

k8s.gcr.io/kube-apiserver:v1.18.15

k8s.gcr.io/kube-controller-manager:v1.18.15

k8s.gcr.io/kube-scheduler:v1.18.15

k8s.gcr.io/kube-proxy:v1.18.15

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.7

more image.sh

#!/bin/bash

url=registry.cn-hangzhou.aliyuncs.com/google_containers

version=$1

images=(`kubeadm config images list --kubernetes-version=$version|awk -F '/' '{print $2}'`)

for imagename in ${images[@]} ; do

docker pull $url/$imagename

docker tag $url/$imagename k8s.gcr.io/$imagename

docker rmi -f $url/$imagename

done

sh image.sh v1.18.15
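To preview what image.sh will do before it touches Docker, the name mapping can be printed as a dry run. This is only a sketch: `plan_image_mirror` is a hypothetical helper, and it strips the registry prefix with shell parameter expansion instead of the awk call used in the script.

```shell
#!/bin/sh
# Print the pull/tag/rmi commands for one upstream image without running them.
mirror=registry.cn-hangzhou.aliyuncs.com/google_containers

plan_image_mirror() {
    upstream="$1"               # e.g. k8s.gcr.io/kube-proxy:v1.18.15
    name="${upstream##*/}"      # drop the registry prefix -> kube-proxy:v1.18.15
    echo "docker pull $mirror/$name"
    echo "docker tag $mirror/$name $upstream"
    echo "docker rmi $mirror/$name"
}
```

For instance: `kubeadm config images list --kubernetes-version=v1.18.15 2>/dev/null | while read -r img; do plan_image_mirror "$img"; done`.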

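Install and start HAProxy and keepalived

- Create start-haproxy.sh

Change the Kubernetes master node IP addresses to the values used by your cluster (the master port defaults to 6443 and does not need to change).

mkdir -p /opt/lb

cat > /opt/lb/start-haproxy.sh << "EOF"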

#!/bin/bash

MasterIP1=192.168.5.220

MasterIP2=192.168.5.221

MasterIP3=192.168.5.222

MasterPort=6443

docker run -d --restart=always --name HAProxy-K8S -p 6444:6444 \

-e MasterIP1=$MasterIP1 \

-e MasterIP2=$MasterIP2 \

-e MasterIP3=$MasterIP3 \

-e MasterPort=$MasterPort \

wise2c/haproxy-k8s

EOF

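- Create start-keepalived.sh

Set the virtual IP address VIRTUAL_IP, the network interface name INTERFACE, the subnet mask length NETMASK_BIT, the router ID RID and the virtual router ID VRID to the values used by your cluster. (CHECK_PORT, 6444, normally stays unchanged: it is the port exposed by HAProxy, which forwards to port 6443 on the Kubernetes master servers.)

cat > /opt/lb/start-keepalived.sh << "EOF"

#!/bin/bash

VIRTUAL_IP=192.168.5.230 # the VIP must be an unused address; ping it first to make sure nobody is using it

INTERFACE=ens32 # ens32 is the actual NIC name; check it with ip a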

NETMASK_BIT=24

CHECK_PORT=6444

RID=10

VRID=160

MCAST_GROUP=224.0.0.18

docker run -itd --restart=always --name=Keepalived-K8S \

--net=host --cap-add=NET_ADMIN \

-e VIRTUAL_IP=$VIRTUAL_IP \

-e INTERFACE=$INTERFACE \

-e CHECK_PORT=$CHECK_PORT \

-e RID=$RID \

-e VRID=$VRID \

-e NETMASK_BIT=$NETMASK_BIT \

-e MCAST_GROUP=$MCAST_GROUP \

wise2c/keepalived-k8s

EOF

- Start HAProxy and keepalived

sh /opt/lb/start-keepalived.sh && sh /opt/lb/start-haproxy.sh

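All of the steps above must be run on every VM. Make sure that on each VM Docker and Kubernetes are installed correctly, the k8s images have been downloaded, and HAProxy and keepalived are running.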
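One way to confirm that keepalived is working is to see which node currently holds the VIP. The sketch below reads the one-line-per-address output of `ip -o -4 addr show` from standard input; `holds_vip` is a hypothetical helper name, and the interface and address are the ones configured above.

```shell
#!/bin/sh
# Succeed (exit 0) when the given IPv4 address appears in `ip -o -4 addr show`
# output read from stdin. Field 4 of that output is ADDRESS/PREFIX.
holds_vip() {
    awk -v vip="$1" '
        { split($4, a, "/"); if (a[1] == vip) found = 1 }
        END { exit(found ? 0 : 1) }
    '
}
```

Usage on a node: `ip -o -4 addr show dev ens32 | holds_vip 192.168.5.230 && echo "this node holds the VIP"`. Exactly one of the three nodes would be expected to print the message.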

Set the host names

- Set the hostname

# k8s-master01

hostnamectl set-hostname k8s-master01

# k8s-master02

hostnamectl set-hostname k8s-master02

# k8s-master03

hostnamectl set-hostname k8s-master03

- Update /etc/hosts

cat >> /etc/hosts << EOF

192.168.5.220 k8s-master01

192.168.5.221 k8s-master02

192.168.5.222 k8s-master03

EOF

- Reboot

reboot

Build the k8s cluster

Initialize the master node (run on k8s-master01 only)

- Create the initialization config file; the following command generates a default one

kubeadm config print init-defaults > kubeadm-config.yaml

vi kubeadm-config.yaml

- Edit kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  # IP of k8s-master01: 192.168.5.220
  advertiseAddress: 192.168.5.220
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
# Keepalived virtual IP and HAProxy port
controlPlaneEndpoint: "192.168.5.230:6444"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
# Set the version to install
kubernetesVersion: v1.18.15
networking:
  dnsDomain: cluster.local
  # Pod CIDR. Calico's own default is 192.168.0.0/16; calico.yaml is edited later to use this value
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
# Enable IPVS mode
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs
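The pod subnet, the service subnet and the host network should not overlap. The following is a small sketch for checking that with plain shell arithmetic. The helper names are made up for illustration, and 192.168.5.0/24 for the host network is an assumption derived from the node addresses and NETMASK_BIT=24.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1                     # split the address on dots into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "first last" (as integers) for a CIDR such as 10.96.0.0/12.
cidr_range() {
    base=$(ip_to_int "${1%/*}")
    bits="${1#*/}"
    size=$(( 1 << (32 - bits) ))
    first=$(( base - (base % size) ))
    echo "$first $(( first + size - 1 ))"
}

# Succeed (exit 0) when the two CIDRs share at least one address.
cidrs_overlap() {
    set -- $(cidr_range "$1") $(cidr_range "$2")   # $1 $2 = first range, $3 $4 = second
    [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

With the values used here, `cidrs_overlap 10.244.0.0/16 10.96.0.0/12` and `cidrs_overlap 10.244.0.0/16 192.168.5.0/24` both fail (no overlap), while `cidrs_overlap 192.168.0.0/16 192.168.5.0/24` succeeds, which is one reason not to keep Calico's default range on this network.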

- Initialize with kubeadm

# Run kubeadm init

kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

# Configure kubectl

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

# Verify that it worked

kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 NotReady master 7m11s v1.18.15

# Note that the node is NotReady here because no network plugin is installed yet

kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get http://127.0.0.1:10252/healthz: dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get http://127.0.0.1:10251/healthz: dial tcp 127.0.0.1:10251: connect: connection refused

etcd-0 Healthy {"health":"true"}

# Note that controller-manager and scheduler are reported as Unhealthy here; the fix follows

- Fix the Unhealthy status of controller-manager and scheduler

Open ports 10251 and 10252 of the scheduler and the controller-manager

Edit the following files:

/etc/kubernetes/manifests/kube-scheduler.yaml: comment out the port=0 line

/etc/kubernetes/manifests/kube-controller-manager.yaml: comment out the port=0 line

# Restart kubelet

systemctl restart kubelet

kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

# Note that controller-manager and scheduler are now Healthy
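The manual edit of the two manifests can also be scripted. A minimal sketch, assuming the argument appears as a list item `- --port=0` on its own line; `comment_port_zero` is a hypothetical helper name. It deliberately leaves no backup copy next to the manifest, since the kubelet may treat extra files in the manifests directory as static pods.

```shell
#!/bin/sh
# Comment out the "- --port=0" argument in a static pod manifest, keeping indentation.
# Re-running is harmless because an already commented line no longer matches.
comment_port_zero() {
    manifest="$1"
    scratch=$(mktemp)
    sed 's/^\([[:space:]]*\)\(- --port=0\)[[:space:]]*$/\1# \2/' "$manifest" > "$scratch" &&
        cat "$scratch" > "$manifest"     # rewrite in place, keeping owner and mode
    rm -f "$scratch"
}
```

Usage: `comment_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml`, and likewise for kube-controller-manager.yaml.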

- Install the Calico network plugin

# Download

wget https://docs.projectcalico.org/manifests/calico.yaml

# Replace the IP range in the Calico manifest with the kubeadm networking.podSubnet value 10.244.0.0

sed -i 's/192.168.0.0/10.244.0.0/g' calico.yaml

# Install

kubectl apply -f calico.yaml

kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 59m v1.18.15

# Note that the node is now Ready

- Join the other master nodes (run on k8s-master02 and k8s-master03)

# Example command

kubeadm join 192.168.5.230:6444 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:32102a6df7b4dd044ad461d4d84d165ed3f43c4ad06cc6bbe1b38e47d1d7ee7c \

--control-plane --certificate-key 67a5cdc70d254a96e2e41c2b0e54b5660b969c600c60a516ff357fa6a96e6a1d
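The real join command is printed by kubeadm init and was saved to kubeadm-init.log by the tee above. As a sketch, it can be pulled back out of the log as follows; `control_plane_join` is a hypothetical helper, and it assumes the command is printed as a block of backslash-continued lines that starts with `kubeadm join`.

```shell
#!/bin/sh
# Print the first "kubeadm join" command in the log that contains --control-plane.
control_plane_join() {
    awk '
        /kubeadm join/ { block = ""; collecting = 1 }
        collecting {
            block = block $0 "\n"
            if ($0 !~ /\\$/) {                 # no trailing backslash: command ends here
                if (block ~ /--control-plane/) { printf "%s", block; exit }
                collecting = 0
            }
        }
    ' "$1"
}
```

Usage: `control_plane_join kubeadm-init.log`. If the bootstrap token (ttl 24h in the config above) or the uploaded certificates have expired, `kubeadm token create --print-join-command` and `kubeadm init phase upload-certs --upload-certs` can generate fresh values.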

- Check the Kubernetes status:

[root@k8s-master01 k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master01 Ready master 131m v1.18.15

k8s-master02 Ready master 69m v1.18.15

k8s-master03 Ready master 69m v1.18.15

- Allow pods to be scheduled on the masters

By default, pods are not scheduled onto master nodes. The following command allows the master nodes to run pods as well:

[root@k8s-master01 k8s]# kubectl taint node k8s-master01 k8s-master02 k8s-master03 node-role.kubernetes.io/master-

node/k8s-master01 untainted

node/k8s-master02 untainted

node/k8s-master03 untainted

- Lift the default NodePort range restriction

Run this on all of k8s-master01, k8s-master02 and k8s-master03.

By default k8s exposes node ports in the range 30000~32767. The range can be changed in /etc/kubernetes/manifests/kube-apiserver.yaml: find the --service-cluster-ip-range line and add the following line below it:

- --service-node-port-range=10-65535

Then restart kubelet.

systemctl daemon-reload && systemctl restart kubelet
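Since the same edit has to be repeated on three nodes, it can be scripted. A minimal sketch, with `add_node_port_range` as a hypothetical helper name: it inserts the flag right after the --service-cluster-ip-range line, copies that line's indentation, and does nothing if the flag is already present.

```shell
#!/bin/sh
# Insert "- --service-node-port-range=RANGE" below the --service-cluster-ip-range line.
add_node_port_range() {
    manifest="$1"
    range="${2:-10-65535}"
    # Already configured: leave the file untouched.
    grep -q -- '--service-node-port-range=' "$manifest" && return 0
    scratch=$(mktemp)
    awk -v range="$range" '
        { print }
        /--service-cluster-ip-range=/ {
            indent = $0
            sub(/-.*/, "", indent)             # keep only the leading whitespace
            print indent "- --service-node-port-range=" range
        }
    ' "$manifest" > "$scratch" && cat "$scratch" > "$manifest"
    rm -f "$scratch"
}
```

Usage: `add_node_port_range /etc/kubernetes/manifests/kube-apiserver.yaml`.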

Install helm3

cd /opt/k8s

wget https://get.helm.sh/helm-v3.5.0-linux-amd64.tar.gz

# Unpack and copy the binary to /usr/bin

tar -zxvf helm-v3.5.0-linux-amd64.tar.gz

cp linux-amd64/helm /usr/bin

# Print the version

helm version

version.BuildInfo{Version:"v3.5.0", GitCommit:"32c22239423b3b4ba6706d450bd044baffdcf9e6", GitTreeState:"clean", GoVersion:"go1.15.6"}

Install nginx-ingress

- Download the ingress chart

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

"ingress-nginx" has been added to your repositories

helm pull ingress-nginx/ingress-nginx

tar -zxf ingress-nginx-3.22.0.tgz && cd ingress-nginx

- Edit values.yaml

# Change the controller image repository
repository: pollyduan/ingress-nginx-controller
# Comment out the digest
# digest: sha256:9bba603b99bf25f6d117cf1235b6598c16033ad027b143c90fa5b3cc583c5713
# dnsPolicy
dnsPolicy: ClusterFirstWithHostNet
# Use hostNetwork, i.e. ports 80 and 443 on the host
hostNetwork: true
# Use a DaemonSet so that ingress is deployed on the selected nodes
kind: DaemonSet
# Node selection: label the nodes that should run ingress with ingress=true
nodeSelector:
  kubernetes.io/os: linux
  ingress: "true"
# Change the service type to ClusterIP. In a cloud environment with a load balancer, LoadBalancer can be used instead
type: ClusterIP
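The settings above sit at different depths inside values.yaml. As an alternative to editing the chart in place, the same changes could be kept in a separate override file. The sketch below is an assumption about where these keys live in the chart (under `controller`, with the service type under `controller.service`); verify the paths against the values.yaml of the chart version you pulled. The function and file names are made up for illustration.

```shell
#!/bin/sh
# Write an override file mirroring the values.yaml edits described above.
# Key paths are assumed from the ingress-nginx chart layout; check your chart.
write_ingress_overrides() {
    cat > "$1" <<'EOF'
controller:
  image:
    repository: pollyduan/ingress-nginx-controller
    digest: ""
  dnsPolicy: ClusterFirstWithHostNet
  hostNetwork: true
  kind: DaemonSet
  nodeSelector:
    kubernetes.io/os: linux
    ingress: "true"
  service:
    type: ClusterIP
EOF
}
```

Usage: `write_ingress_overrides ingress-overrides.yaml`, then pass it to helm with `-f ingress-overrides.yaml` at install time.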

- Install ingress

# Label the selected nodes

kubectl label node k8s-master01 ingress=true

kubectl label node k8s-master02 ingress=true

kubectl label node k8s-master03 ingress=true

# Install ingress

helm install ingress-nginx ./

NAME: ingress-nginx

LAST DEPLOYED: Thu Jan 28 23:00:09 2021

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

Get the application URL by running these commands:

export POD_NAME=$(kubectl --namespace default get pods -o jsonpath="{.items[0].metadata.name}" -l "app=ingress-nginx,component=controller,release=ingress-nginx")

kubectl --namespace default port-forward $POD_NAME 8080:80

echo "Visit http://127.0.0.1:8080 to access your application."

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: example
  namespace: foo
spec:
  rules:
    - host: www.example.com
      http:
        paths:
          - backend:
              serviceName: exampleService
              servicePort: 80
            path: /
  # This section is only required if TLS is to be enabled for the Ingress
  tls:
    - hosts:
        - www.example.com
      secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1
kind: Secret
metadata:
  name: example-tls
  namespace: foo
data:
  tls.crt: <base64 encoded cert>
  tls.key: <base64 encoded key>
type: kubernetes.io/tls

- Confirm that nginx-ingress is installed correctly.

kubectl get all

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-controller-4ccfz 1/1 Running 0 10h

pod/ingress-nginx-controller-fjqgw 1/1 Running 0 10h

pod/ingress-nginx-controller-xqcx7 1/1 Running 0 10h

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller ClusterIP 10.105.250.15 <none> 80/TCP,443/TCP 10h

service/ingress-nginx-controller-admission ClusterIP 10.101.89.154 <none> 443/TCP 10h

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 14h

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/ingress-nginx-controller 3 3 3 3 3 ingress=true,kubernetes.io/os=linux 10h

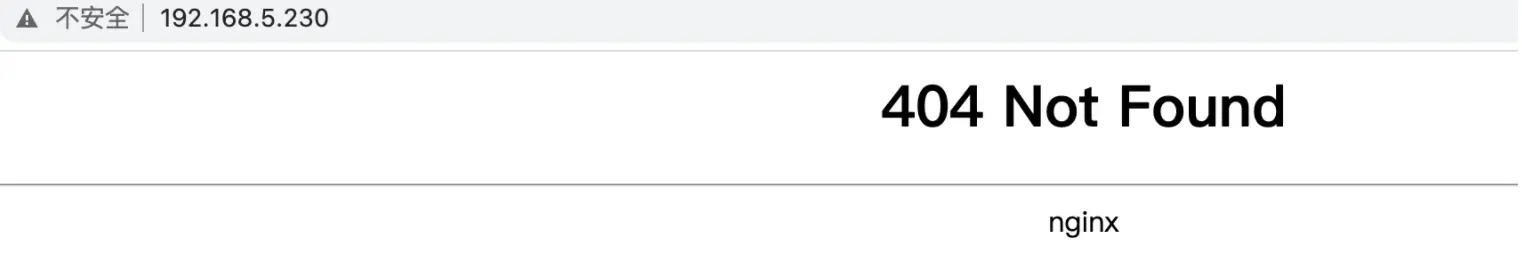

用浏览器访问 http://192.168.5.230/,界面如下:

Install kubernetes-dashboard

- Download kubernetes-dashboard

helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/

"kubernetes-dashboard" has been added to your repositories

helm pull kubernetes-dashboard/kubernetes-dashboard

tar -zxf kubernetes-dashboard-4.0.0.tgz && cd kubernetes-dashboard

- Edit values.yaml

ingress:
  # Enable ingress
  enabled: true
  # Access through the given host name
  hosts:
    - kdb.k8s.com

- Install kubernetes-dashboard

# Install kubernetes-dashboard

helm install kubernetes-dashboard ./ -n kube-system

NAME: kubernetes-dashboard

LAST DEPLOYED: Fri Jan 29 20:21:39 2021

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

*********************************************************************************

*** PLEASE BE PATIENT: kubernetes-dashboard may take a few minutes to install ***

*********************************************************************************

From outside the cluster, the server URL(s) are:

https://kdb.k8s.com

- Confirm that kubernetes-dashboard is installed correctly

kubectl get po -n kube-system |grep kubernetes-dashboard

kubernetes-dashboard-69574fd85c-ngfff 1/1 Running 0 9m33s

kubectl get svc -n kube-system |grep kubernetes-dashboard

kubernetes-dashboard ClusterIP 10.110.87.163 <none> 443/TCP 9m40s

kubectl get ingress -n kube-system |grep kubernetes-dashboard

kubernetes-dashboard <none> kdb.k8s.com 10.107.1.113 80 9m22s
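The walkthrough does not say how kdb.k8s.com resolves. One possibility, purely an assumption here, is a hosts entry on the client machine that points the name at the virtual IP 192.168.5.230, where the ingress controller listens on ports 80 and 443. A small sketch with a hypothetical helper `ensure_host_entry`:

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file unless an uncommented line already maps NAME.
ensure_host_entry() {
    hosts_file="$1"; ip="$2"; name="$3"
    if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }                       # ignore comment lines
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit(found ? 0 : 1) }
        ' "$hosts_file"; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}
```

Usage on a Linux client, as root: `ensure_host_entry /etc/hosts 192.168.5.230 kdb.k8s.com`.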

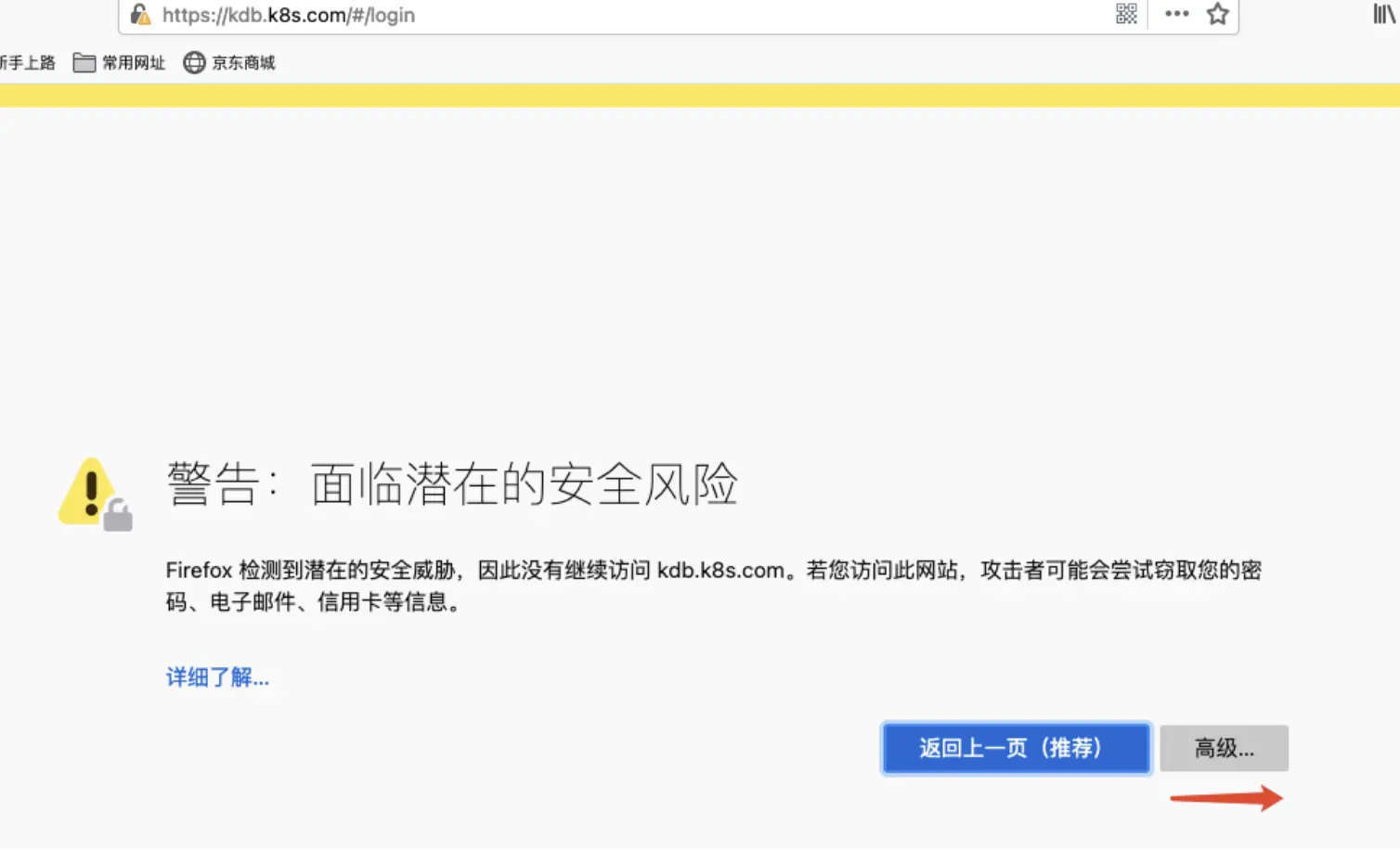

用域名访问https://kdb.k8s.com,显示风险提示页面如下:

Click the "Advanced" button. The page explains the risk and offers a button to accept the risk and continue:

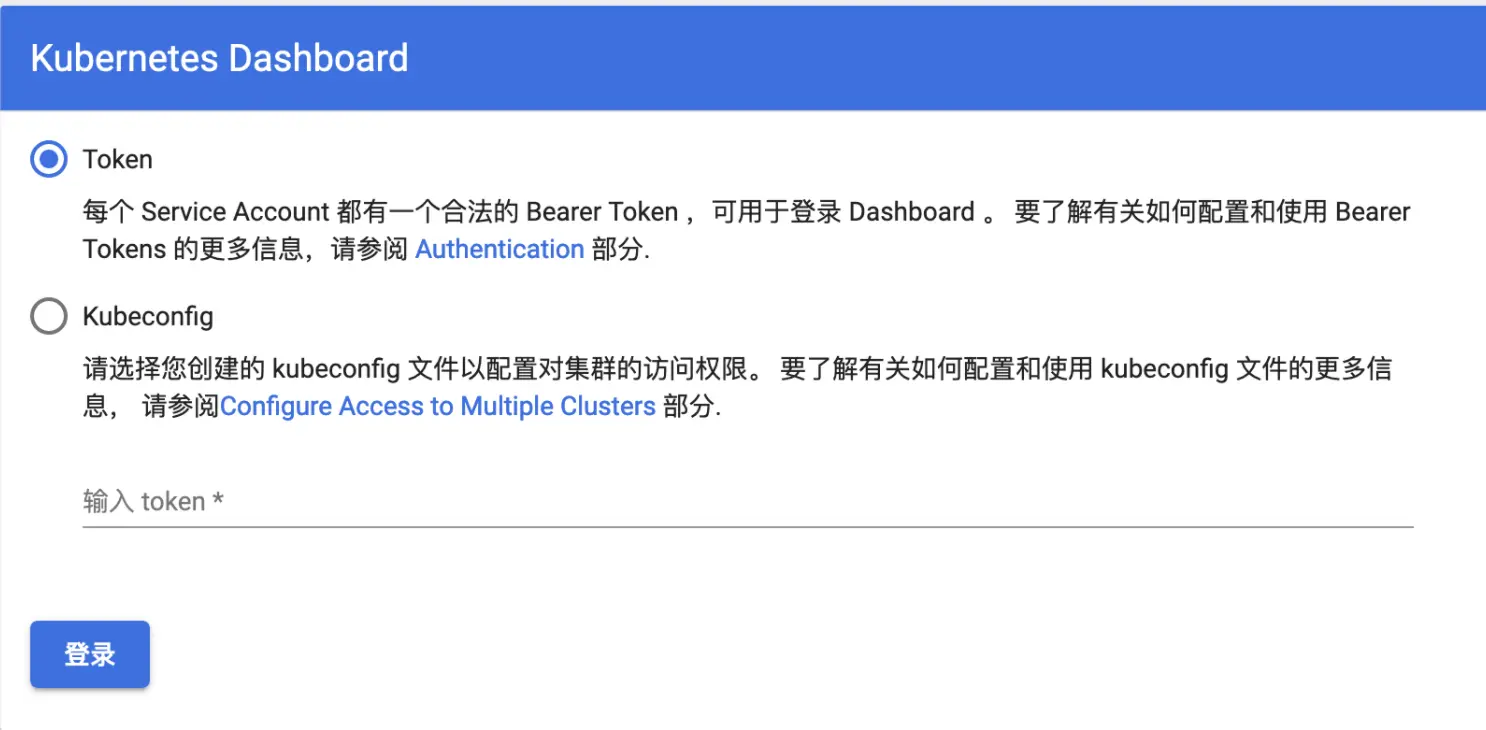

Click "Accept the Risk and Continue" to reach the login page:

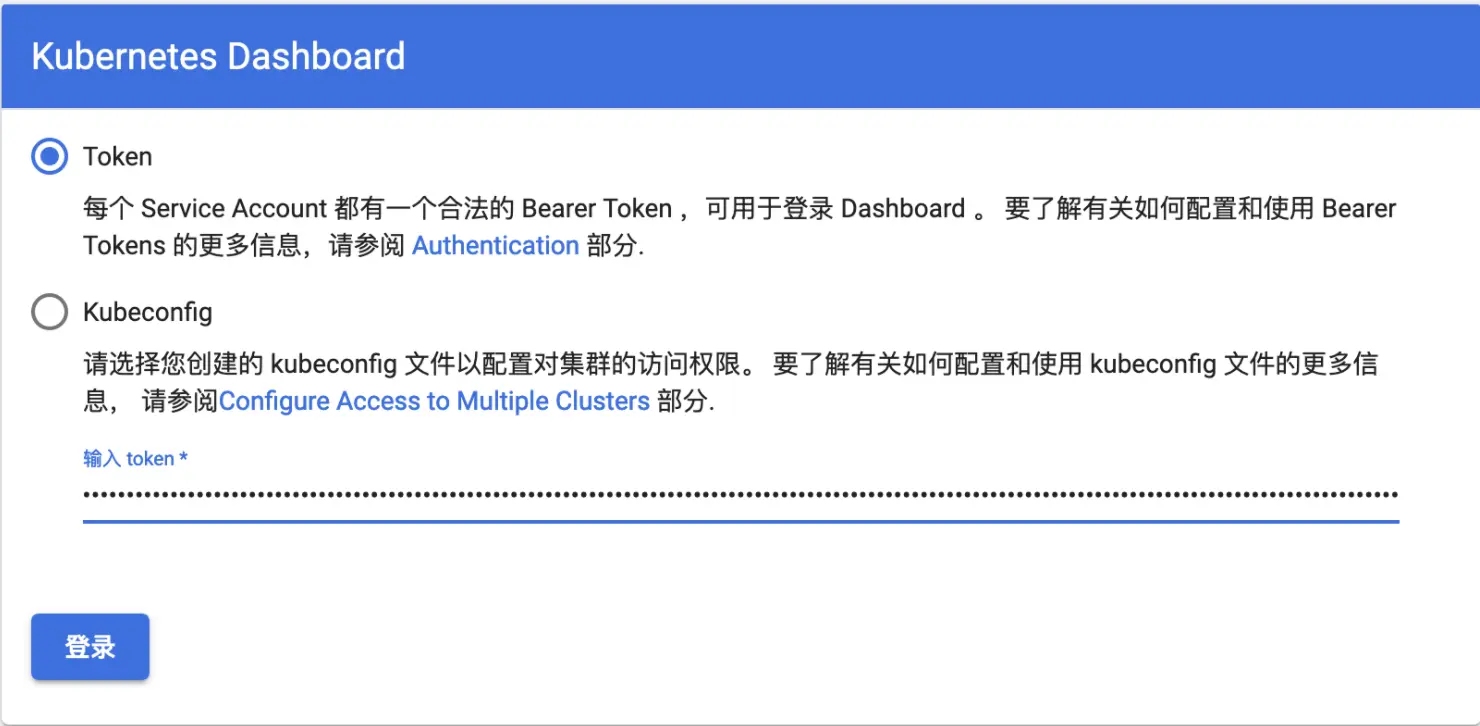

Look up the token and log in with it

# Find the secret that contains the token

kubectl get secret -n kube-system | grep kubernetes-dashboard-token

kubernetes-dashboard-token-9llx4 kubernetes.io/service-account-token 3 25m

# Show the token

kubectl describe secret -n kube-system kubernetes-dashboard-token-9llx4

Name: kubernetes-dashboard-token-9llx4

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: eb2a13d1-e3cb-47c3-9376-4e7ffba6d5e0

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Ilh1cE93UXFVOGR1ZUs3bjJEVGtZZDJxaUxmVXlyMkYtaEVONUZsN3FqQkUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi05bGx4NCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImViMmExM2QxLWUzY2ItNDdjMy05Mzc2LTRlN2ZmYmE2ZDVlMCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.mx7-LFVA0dn0_hRLxXSstuKTNyTc3klUzdOd3uE5hrEgDjvB7YNC7Y7gP9hBeRU3a9Kh0eG6Z1OfvNDgMUXNxv4Hqi0bqghE9Th4PPGtitDiXlVhtVQSA3eUgbQjNcuYWere30SizEckPRVm-Vpu-31-hEtfClUlnhlbPtEc-lDaOoRncqUpi07uZfT0MCoGzADxUaAJU6v5AUs3iP_0xl1cVqDJtXk-ysabG-KG1y6c8iJiPRUgwi8Uj499dOHjcDcZlJxE3dLgoPvKJ-YWrPUZH_alBIUgj-o2XdgPiY4od3xFnpLDpbmrmyYbDmqT_zyzvoNVfp2WtjzoZi1fwg
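To avoid copying the long token by hand, it can be filtered out of the describe output. A minimal sketch; `extract_token` is a hypothetical helper that reads `kubectl describe secret` output on standard input.

```shell
#!/bin/sh
# Print the value of the "token:" field from `kubectl describe secret` output.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}
```

Usage: `kubectl describe secret -n kube-system kubernetes-dashboard-token-9llx4 | extract_token`.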

使用TOken方式登录,填入token,点登录按钮后进入dashboard如下

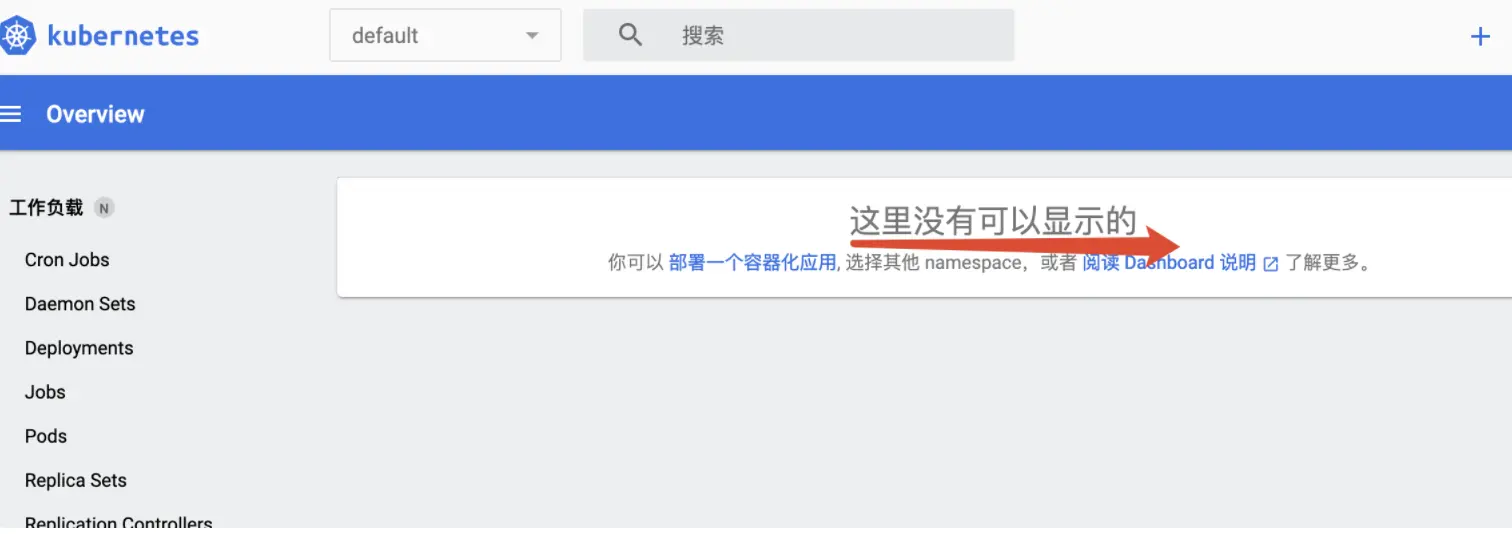

可以看到这里显示“这里没有可以显示的”,是因为dashboard默认的serviceaccount并没有权限,所以我们需要给予它授权。

创建dashboard-admin.yaml,内容为以下

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

Grant access to the dashboard serviceaccount

kubectl apply -f dashboard-admin.yaml

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard configured

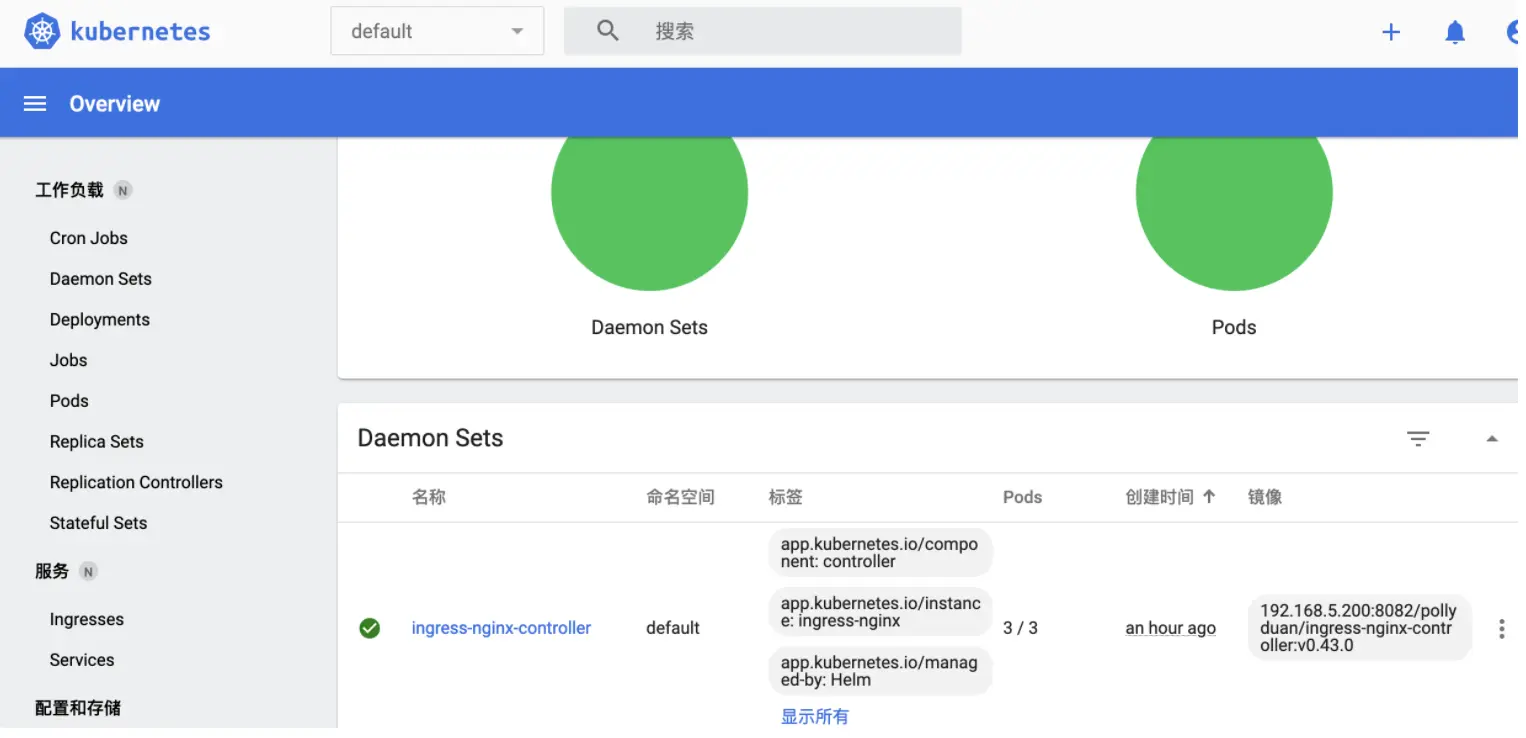

然后刷新dashboard,这时候可以通过dashboard查看如下

Install local-path

- Download local-path-provisioner

wget https://github.com/rancher/local-path-provisioner/archive/v0.0.19.tar.gz

tar -zxf v0.0.19.tar.gz && cd local-path-provisioner-0.0.19

- Install local-path-provisioner

kubectl apply -f deploy/local-path-storage.yaml

kubectl get po -n local-path-storage

NAME READY STATUS RESTARTS AGE

local-path-provisioner-5b577f66ff-q2chs 1/1 Running 0 24m

[root@k8s-master01 deploy]# kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local-path rancher.io/local-path Delete WaitForFirstConsumer false 24m

- Make local-path the default storage class

kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/local-path patched

kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.beta.kubernetes.io/is-default-class":"true"}}}'

storageclass.storage.k8s.io/local-path patched

[root@k8s-master01 deploy]# kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

local-path (default) rancher.io/local-path Delete WaitForFirstConsumer false 27m 25m

- Verify that local-path works

kubectl apply -f examples/pod.yaml,examples/pvc.yaml

pod/volume-test created

persistentvolumeclaim/local-path-pvc created

kubectl get pvc,pv

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/local-path-pvc Bound pvc-74935037-f8c7-4f1a-ab2d-f7670f8ff540 2Gi RWO local-path 58s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-74935037-f8c7-4f1a-ab2d-f7670f8ff540 2Gi RWO Delete Bound default/local-path-pvc local-path 37s
