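Prometheus is the first choice for monitoring a k8s cluster. However, taking the procedure used for x86 k8s clusters and applying it directly to a Raspberry Pi k8s cluster requires a lot of adaptation work, so I don't recommend it. Instead I recommend an excellent project on GitHub, https://github.com/carlosedp/cluster-monitoring. After repeated testing I found it largely usable on a Raspberry Pi k8s cluster, so here is a brief walkthrough of the installation.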

Prerequisites

- A Raspberry Pi k8s cluster: three nodes is best, but a single node also works.

root@pi4-master01:~# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

pi4-master01 Ready master 4d18h v1.15.10 192.168.5.18 <none> Ubuntu 20.04 LTS 5.4.0-1011-raspi docker://18.9.9

pi4-node01 Ready node 4d17h v1.15.10 192.168.5.19 <none> Ubuntu 20.04 LTS 5.4.0-1011-raspi docker://18.9.9

pi4-node02 Ready node 4d17h v1.15.10 192.168.5.20 <none> Ubuntu 20.04 LTS 5.4.0-1011-raspi docker://18.9.9

- helm and nginx-ingress are already installed on the Raspberry Pi k8s cluster

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# helm version

Client: &version.Version{SemVer:"v2.15.0", GitCommit:"c2440264ca6c078a06e088a838b0476d2fc14750", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.15.0+unreleased", GitCommit:"9668ad4d90c5e95bd520e58e7387607be6b63bb6", GitTreeState:"dirty"}

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# helm list

NAME REVISION UPDATED STATUS CHART APP VERSION NAMESPACE

nginx-ingress 1 Fri Jul 3 17:11:20 2020 DEPLOYED nginx-ingress-0.9.5 0.10.2 default

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4d21h

nginx-ingress-controller NodePort 10.110.89.242 192.168.5.18 80:12001/TCP,443:12002/TCP 4d16h

nginx-ingress-default-backend ClusterIP 10.104.65.1 <none> 80/TCP 4d16h

- A storage class is installed on the Raspberry Pi k8s cluster and set as the default

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# kubectl get storageclass

NAME PROVISIONER AGE

local-path (default) rancher.io/local-path 4d15h

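By the way, if you don't have a Raspberry Pi k8s cluster yet, the article "Raspberry Pi k8s Cluster Getting-Started Guide" is a good reference. Welcome aboard.

- Prometheus monitoring installation files: https://github.com/carlosedp/cluster-monitoring

We install v0.37.0. All of the following steps are performed while logged in to the master node pi4-master01.

Download and extract the installation files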

root@pi4-master01:~/k8s# wget https://github.com/carlosedp/cluster-monitoring/archive/v0.37.0.tar.gz

root@pi4-master01:~/k8s# tar -zxvf v0.37.0.tar.gz

root@pi4-master01:~/k8s# cd cluster-monitoring-0.37.0/

Build preparation

Run make vendor to install the build tools. The first run hits a few pitfalls; just follow the prompts to resolve them.

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# make vendor

Command 'make' not found, but can be installed with:

apt install make # version 4.2.1-1.2, or

apt install make-guile # version 4.2.1-1.2

# make needs to be installed

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# apt install make

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# make vendor

make: go: command not found

make: go: command not found

make: go: command not found

Installing jsonnet-bundler

make: go: command not found

make: *** [Makefile:49: /bin/jb] Error 127

# go needs to be installed

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# apt install -y golang

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# make vendor

Installing jsonnet-bundler

root@wb-predict:~/monitoring/cluster-monitoring-0.37.0# make vendor

Installing jsonnet-bundler

rm -rf vendor

/root/go/bin/jb install

GET https://github.com/coreos/kube-prometheus/archive/285624d8fbef01923f7b9772fe2da21c5698a666.tar.gz 200

GET https://github.com/ksonnet/ksonnet-lib/archive/0d2f82676817bbf9e4acf6495b2090205f323b9f.tar.gz 200

GET https://github.com/brancz/kubernetes-grafana/archive/57b4365eacda291b82e0d55ba7eec573a8198dda.tar.gz 200

GET https://github.com/kubernetes/kube-state-metrics/archive/c485728b2e585bd1079e12e462cd7c6fef25f155.tar.gz 200

GET https://github.com/coreos/prometheus-operator/archive/59bdf55453ba08b4ed7c271cb3c6627058945ed5.tar.gz 200

GET https://github.com/coreos/etcd/archive/07a74d61cb6c07965c5b594748dc999d1644862b.tar.gz 200

GET https://github.com/prometheus/prometheus/archive/012161d90d6a8a6bb930b90601fb89ff6cc3ae60.tar.gz 200

GET https://github.com/kubernetes-monitoring/kubernetes-mixin/archive/ea905d25c01ff4364937a2faed248e5f2f3fdb35.tar.gz 200

GET https://github.com/prometheus/node_exporter/archive/0107bc794204f50d887898da60032da890637471.tar.gz 200

GET https://github.com/kubernetes/kube-state-metrics/archive/c485728b2e585bd1079e12e462cd7c6fef25f155.tar.gz 200

GET https://github.com/grafana/jsonnet-libs/archive/03da9ea0fc25e621d195fbb218a6bf8593152721.tar.gz 200

GET https://github.com/grafana/grafonnet-lib/archive/7a932c9cfc6ccdb1efca9535f165e055949be42a.tar.gz 200

GET https://github.com/metalmatze/slo-libsonnet/archive/5ddd7ffc39e7a54c9aca997c2c389a8046fab0ff.tar.gz 200
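Both failures in the session above come from tools that are missing on a fresh system. A small preflight check, sketched below, reports them in one pass before make vendor is run. The function name missing_tools is made up for this illustration, and the tool list (make, go) is taken only from the errors shown above, so it may not be exhaustive.

```shell
#!/bin/sh
# Print every tool from the argument list that is not on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# make and go are the two tools the session above had to install.
missing=$(missing_tools make go)
if [ -n "$missing" ]; then
    echo "install these before running 'make vendor':" $missing
else
    echo "make and go found; ready for 'make vendor'"
fi
```

On Ubuntu the missing packages can then be installed with apt install make and apt install -y golang, as in the session above.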

Build

Build with make

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# make

Installing jsonnet

rm -rf manifests

./scripts/build.sh main.jsonnet /root/go/bin/jsonnet

using jsonnet from arg

+ set -o pipefail

+ rm -rf manifests

+ mkdir -p manifests/setup

+ /root/go/bin/jsonnet -J vendor -m manifests main.jsonnet

+ xargs '-I{}' sh -c 'cat {} | $(go env GOPATH)/bin/gojsontoyaml > {}.yaml; rm -f {}' -- '{}'

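Install

First run kubectl apply -f manifests/setup/ to install the CRDs, then kubectl apply -f manifests to install Prometheus. The setup directory creates the monitoring namespace, the CRDs and the prometheus-operator; the remaining manifests include custom resources (such as ServiceMonitor objects) that can only be created once those CRDs exist.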

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# kubectl apply -f manifests/setup/

namespace/monitoring created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

service/prometheus-operator created

serviceaccount/prometheus-operator created

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# kubectl apply -f manifests

alertmanager.monitoring.coreos.com/main created

secret/alertmanager-main created

service/alertmanager-main created

serviceaccount/alertmanager-main created

servicemonitor.monitoring.coreos.com/alertmanager created

secret/grafana-config created

secret/grafana-datasources created

configmap/grafana-dashboard-apiserver created

configmap/grafana-dashboard-cluster-total created

configmap/grafana-dashboard-controller-manager created

configmap/grafana-dashboard-coredns-dashboard created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-node created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-k8s-resources-workload created

configmap/grafana-dashboard-k8s-resources-workloads-namespace created

configmap/grafana-dashboard-kubelet created

configmap/grafana-dashboard-kubernetes-cluster-dashboard created

configmap/grafana-dashboard-namespace-by-pod created

configmap/grafana-dashboard-namespace-by-workload created

configmap/grafana-dashboard-node-cluster-rsrc-use created

configmap/grafana-dashboard-node-rsrc-use created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-persistentvolumesusage created

configmap/grafana-dashboard-pod-total created

configmap/grafana-dashboard-prometheus-dashboard created

configmap/grafana-dashboard-prometheus-remote-write created

configmap/grafana-dashboard-prometheus created

configmap/grafana-dashboard-proxy created

configmap/grafana-dashboard-scheduler created

configmap/grafana-dashboard-statefulset created

configmap/grafana-dashboard-workload-total created

configmap/grafana-dashboards created

deployment.apps/grafana created

service/grafana created

serviceaccount/grafana created

servicemonitor.monitoring.coreos.com/grafana created

ingress.extensions/alertmanager-main created

ingress.extensions/grafana created

ingress.extensions/prometheus-k8s created

clusterrole.rbac.authorization.k8s.io/kube-state-metrics created

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created

deployment.apps/kube-state-metrics created

service/kube-state-metrics created

serviceaccount/kube-state-metrics created

servicemonitor.monitoring.coreos.com/kube-state-metrics created

clusterrole.rbac.authorization.k8s.io/node-exporter created

clusterrolebinding.rbac.authorization.k8s.io/node-exporter created

daemonset.apps/node-exporter created

service/node-exporter created

serviceaccount/node-exporter created

servicemonitor.monitoring.coreos.com/node-exporter created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

clusterrole.rbac.authorization.k8s.io/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter created

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator created

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources created

configmap/adapter-config created

deployment.apps/prometheus-adapter created

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader created

service/prometheus-adapter created

serviceaccount/prometheus-adapter created

clusterrole.rbac.authorization.k8s.io/prometheus-k8s created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s created

service/kube-controller-manager-prometheus-discovery created

service/kube-dns-prometheus-discovery created

service/kube-scheduler-prometheus-discovery created

servicemonitor.monitoring.coreos.com/prometheus-operator created

prometheus.monitoring.coreos.com/k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

rolebinding.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s-config created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

role.rbac.authorization.k8s.io/prometheus-k8s created

prometheusrule.monitoring.coreos.com/prometheus-k8s-rules created

service/prometheus-k8s created

serviceaccount/prometheus-k8s created

servicemonitor.monitoring.coreos.com/prometheus created

servicemonitor.monitoring.coreos.com/kube-apiserver created

servicemonitor.monitoring.coreos.com/coredns created

servicemonitor.monitoring.coreos.com/kube-controller-manager created

servicemonitor.monitoring.coreos.com/kube-scheduler created

servicemonitor.monitoring.coreos.com/kubelet created
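The two-step order above can be wrapped in a small script. This is a minimal sketch and not part of the cluster-monitoring project: the function name install_monitoring is hypothetical, and the polling loop between the two steps is an added precaution (the session above simply runs the two commands one after the other). It assumes kubectl is on the PATH and that the current directory is cluster-monitoring-0.37.0.

```shell
#!/bin/sh
# Apply the namespace, CRDs and operator first, wait until the API server
# serves the ServiceMonitor resource type, then apply everything else.
install_monitoring() {
    kubectl apply -f manifests/setup/ || return 1
    # Poll until the new resource type can be listed (added precaution).
    until kubectl get servicemonitors --all-namespaces >/dev/null 2>&1; do
        sleep 1
    done
    kubectl apply -f manifests/
}

# Usage, from the cluster-monitoring-0.37.0 directory:
#   install_monitoring
```

Waiting between the steps avoids a failed second apply on clusters where the CRDs take a moment to register.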

Verify the installation status

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# kubectl get po -n monitoring

NAME READY STATUS RESTARTS AGE

alertmanager-main-0 2/2 Running 0 118m

grafana-77f9bbbc9-826ht 1/1 Running 0 118m

kube-state-metrics-6d766b45f4-7mjxw 3/3 Running 0 118m

node-exporter-7zskm 2/2 Running 0 118m

node-exporter-njl6z 2/2 Running 0 118m

node-exporter-scdpn 2/2 Running 0 118m

prometheus-adapter-799fc6fd45-jnldr 1/1 Running 0 118m

prometheus-k8s-0 3/3 Running 1 118m

prometheus-operator-7d578bdb5b-ctq9v 1/1 Running 0 119m

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# kubectl get svc -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-main ClusterIP 10.109.71.208 <none> 9093/TCP 119m

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 118m

grafana ClusterIP 10.96.152.138 <none> 3000/TCP 118m

kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 118m

node-exporter ClusterIP None <none> 9100/TCP 118m

prometheus-adapter ClusterIP 10.107.174.201 <none> 443/TCP 118m

prometheus-k8s ClusterIP 10.106.248.61 <none> 9090/TCP 118m

prometheus-operated ClusterIP None <none> 9090/TCP 118m

prometheus-operator ClusterIP None <none> 8080/TCP 119m

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# kubectl get ingress -n monitoring

NAME HOSTS ADDRESS PORTS AGE

alertmanager-main alertmanager.192.168.15.15.nip.io 80, 443 119m

grafana grafana.192.168.15.15.nip.io 80, 443 119m

prometheus-k8s prometheus.192.168.15.15.nip.io 80, 443 119m
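Scanning the pod list by eye works for nine pods, but the check is easy to script. The sketch below filters kubectl get po output for pods whose STATUS is not Running. The function name pods_not_running is hypothetical, and the Pending row in the demo input is invented to show a non-empty result; the real output above has every pod Running.

```shell
#!/bin/sh
# Read `kubectl get po` output on stdin and print the name of every pod
# whose STATUS column (third field) is not "Running". The header line and
# blank lines are skipped.
pods_not_running() {
    awk 'NR > 1 && NF >= 3 && $3 != "Running" { print $1 }'
}

# Demo with sample input; the Pending row is made up for illustration.
pods_not_running <<'EOF'
NAME READY STATUS RESTARTS AGE
grafana-77f9bbbc9-826ht 1/1 Running 0 118m
prometheus-k8s-0 0/3 Pending 0 10s
EOF
```

Against a live cluster the same filter would be used as kubectl get po -n monitoring | pods_not_running; empty output means every pod is Running.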

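Accessing Prometheus and related systems

- IP-to-domain mapping

Before accessing Prometheus, Grafana and Alertmanager, we need to map the IP to the relevant domain names on the client computer. The default host names embed 192.168.15.15, which is the address nip.io would resolve them to, while the nginx-ingress controller in this cluster is reachable at 192.168.5.18, so the mapping is set up by hand as follows:

192.168.5.18 alertmanager.192.168.15.15.nip.io grafana.192.168.15.15.nip.io prometheus.192.168.15.15.nip.io

Tip: on Windows 10 or Windows 7, the file that holds IP-to-domain mappings is C:\Windows\System32\drivers\etc\hosts.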

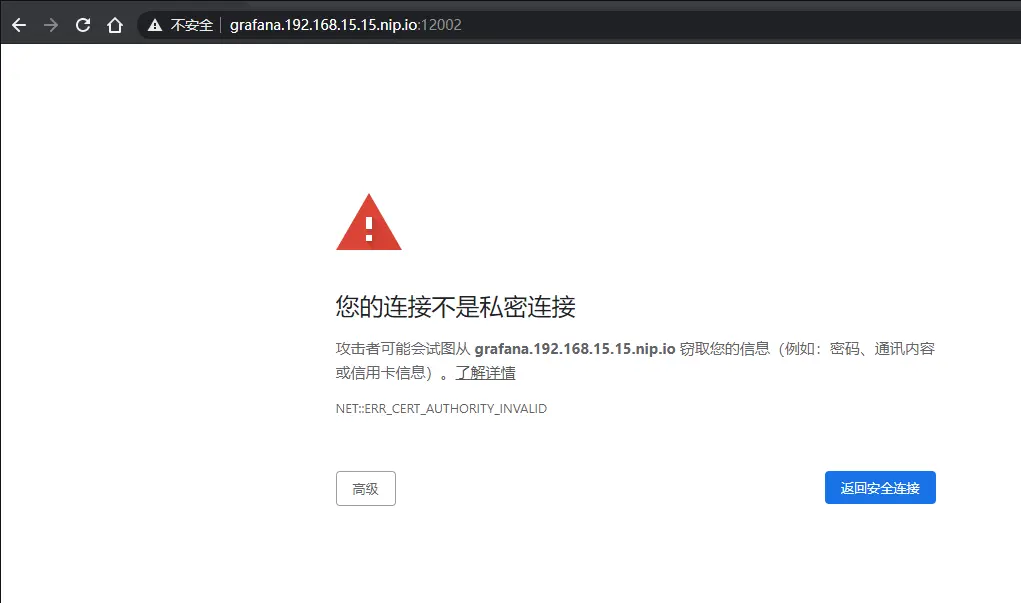
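On a Linux or macOS client the same mapping goes into /etc/hosts. As a small sketch, the entry can be generated from the node IP and the ingress domain suffix instead of being typed by hand. The function name hosts_line is hypothetical; the three service names are the ingress hosts listed earlier.

```shell
#!/bin/sh
# Build one hosts-file line mapping an IP to the three monitoring host names.
#   $1 = IP of the node running the ingress controller
#   $2 = domain suffix used by the ingresses
hosts_line() {
    ip=$1
    suffix=$2
    printf '%s' "$ip"
    for name in alertmanager grafana prometheus; do
        printf ' %s.%s' "$name" "$suffix"
    done
    printf '\n'
}

# Values taken from the outputs shown earlier in this article.
hosts_line 192.168.5.18 192.168.15.15.nip.io
```

The printed line can be appended to /etc/hosts with root privileges.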

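- Handling the security warning

By default, Prometheus, Grafana and Alertmanager can only be reached over HTTPS, and the default server certificate is self-signed, so the browser reports a security risk on the first visit. We can ignore the warning and proceed.

Take the first visit to Grafana in Chrome as an example.

Click the "Advanced" button. The button changes to "Hide advanced", and a prompt appears below it: "Proceed to grafana.192.168.15.15.nip.io (unsafe)".

The prompt is a link; click it and the Grafana login page is displayed.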

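Accessing Prometheus

The default installation must be accessed over HTTPS: https://prometheus.192.168.15.15.nip.io:12002 (12002 is the NodePort that the nginx-ingress controller maps to port 443, as listed in the kubectl get svc output earlier).

Accessing Grafana

The default installation must be accessed over HTTPS: [https://grafana.192.168.15.15.nip.io:12002](https://grafana.192.168.15.15.nip.io:12002)

The default username and password are both admin. After the first successful login, the password must be changed before Grafana can be used.

Once the password is changed, you land on the Grafana home page.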

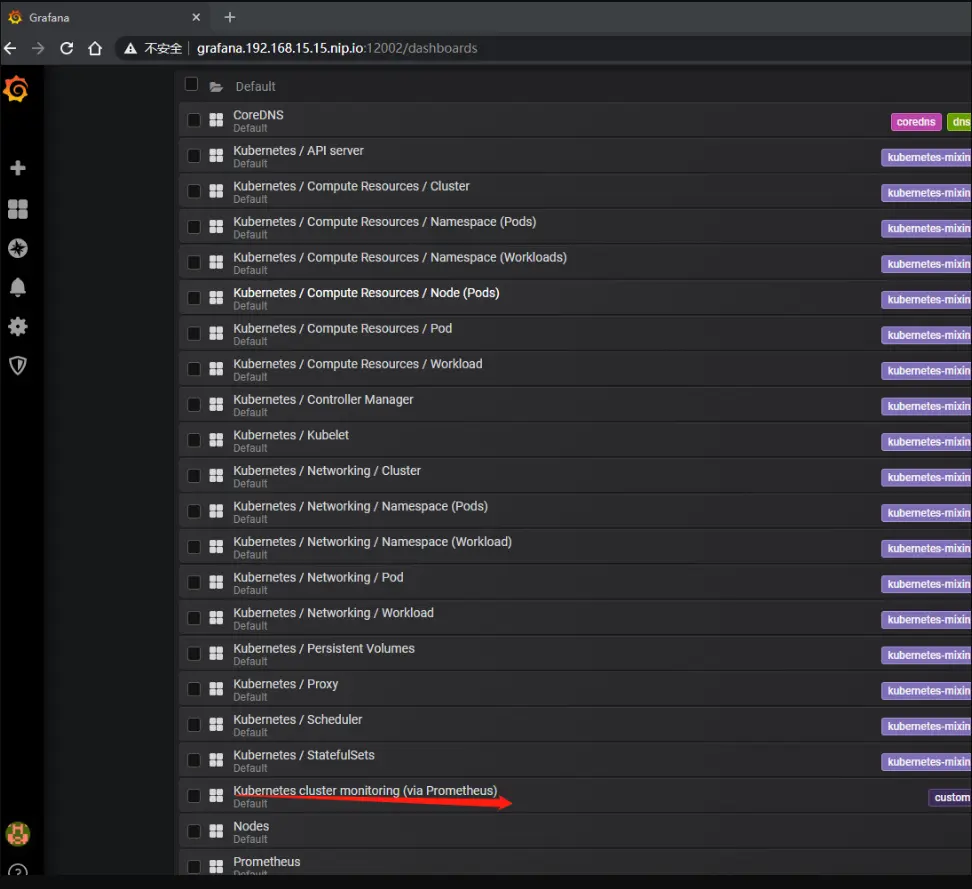

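From the home page, click the Dashboards button in the left sidebar to open the dashboard list.

Open the "Default" folder and you will see that many dashboards are already built in. Feel free to explore them.

Open the Kubernetes cluster monitoring (via Prometheus) dashboard for a complete view of the current state of the Raspberry Pi k8s cluster.

Accessing Alertmanager

The default installation must be accessed over HTTPS: [https://alertmanager.192.168.15.15.nip.io:12002](https://alertmanager.192.168.15.15.nip.io:12002)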
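The three URLs can also be checked from a terminal without touching the hosts file or the browser. The sketch below uses curl, with -k to accept the self-signed certificate and --resolve to pin each host name to the node IP for that one request. The function name probe and the three variables are illustrative; their values come from the outputs shown earlier.

```shell
#!/bin/sh
# Values taken from the kubectl outputs earlier in this article.
NODE_IP=192.168.5.18
HTTPS_PORT=12002
SUFFIX=192.168.15.15.nip.io

# Print the HTTP status code returned by one of the monitoring UIs.
#   $1 = service name: prometheus, grafana or alertmanager
probe() {
    host="$1.$SUFFIX"
    # -k accepts the self-signed certificate; --resolve maps the host name
    # to the node IP for this request only.
    curl -k -s -o /dev/null -w '%{http_code}\n' \
        --resolve "$host:$HTTPS_PORT:$NODE_IP" \
        "https://$host:$HTTPS_PORT/"
}

# Usage:
#   for s in prometheus grafana alertmanager; do probe "$s"; done
```

Any HTTP status code in the output means the ingress answered; a connection error points at the NodePort or the ingress controller instead.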

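Other tweaks

At this point the Prometheus monitoring system is deployed. So far, though, it is merely usable, and we can make it more convenient. Below are a few ways this open-source project can be customized, for your reference.

Adding temperature monitoring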

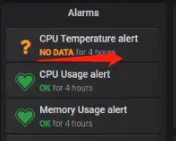

Recall this spot on the Kubernetes cluster monitoring (via Prometheus) dashboard in Grafana:

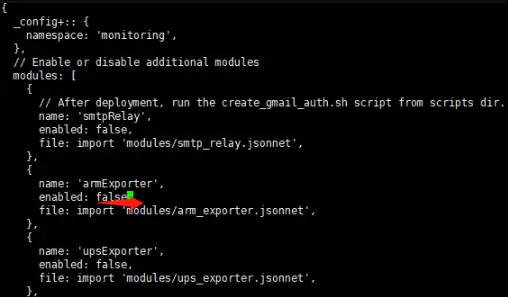

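By default there is no temperature monitoring. Enabling it takes an extra step: editing vars.jsonnet in the project directory.

Find the enabled field under armExporter, change it from false to true, then run make and kubectl apply -f manifests again.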
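The edit can be made in any text editor. For a scripted setup, the sketch below does the same thing with sed. It rests on an assumption about the file layout that this article does not show: that vars.jsonnet lists each module with a name: 'armExporter' line followed, on a later line, by its own enabled: line. The function name enable_arm_exporter and the demo file contents are hypothetical; check the real file before relying on this.

```shell
#!/bin/sh
# Flip `enabled: false` to `enabled: true` for the armExporter module only.
#   $1 = path to vars.jsonnet
enable_arm_exporter() {
    # The sed range starts at the armExporter name line and ends at the
    # first following `enabled:` line, so other modules stay untouched.
    sed "/name: 'armExporter'/,/enabled:/ s/enabled: false/enabled: true/" \
        "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# Demo on a throwaway file that mimics the assumed layout.
demo=$(mktemp)
cat > "$demo" <<'EOF'
    { name: 'smtpRelay',
      enabled: false, },
    { name: 'armExporter',
      enabled: false, },
EOF
enable_arm_exporter "$demo"
cat "$demo"
rm -f "$demo"
```

In the demo only the armExporter entry changes; the smtpRelay entry is an invented neighbor that shows the range does not spill over.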

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# make

rm -rf manifests

./scripts/build.sh main.jsonnet /root/go/bin/jsonnet

using jsonnet from arg

+ set -o pipefail

+ rm -rf manifests

+ mkdir -p manifests/setup

+ /root/go/bin/jsonnet -J vendor -m manifests main.jsonnet

+ xargs '-I{}' sh -c 'cat {} | $(go env GOPATH)/bin/gojsontoyaml > {}.yaml; rm -f {}' -- '{}'

root@pi4-master01:~/k8s/cluster-monitoring-0.37.0# kubectl apply -f manifests

alertmanager.monitoring.coreos.com/main unchanged

secret/alertmanager-main configured

service/alertmanager-main unchanged

serviceaccount/alertmanager-main unchanged

servicemonitor.monitoring.coreos.com/alertmanager unchanged

clusterrole.rbac.authorization.k8s.io/arm-exporter created

clusterrolebinding.rbac.authorization.k8s.io/arm-exporter created

daemonset.apps/arm-exporter created

service/arm-exporter created

serviceaccount/arm-exporter created

servicemonitor.monitoring.coreos.com/arm-exporter created

secret/grafana-config unchanged

secret/grafana-datasources unchanged

configmap/grafana-dashboard-apiserver unchanged

configmap/grafana-dashboard-cluster-total unchanged

configmap/grafana-dashboard-controller-manager unchanged

configmap/grafana-dashboard-coredns-dashboard unchanged

configmap/grafana-dashboard-k8s-resources-cluster unchanged

configmap/grafana-dashboard-k8s-resources-namespace unchanged

configmap/grafana-dashboard-k8s-resources-node unchanged

configmap/grafana-dashboard-k8s-resources-pod unchanged

configmap/grafana-dashboard-k8s-resources-workload unchanged

configmap/grafana-dashboard-k8s-resources-workloads-namespace unchanged

configmap/grafana-dashboard-kubelet unchanged

configmap/grafana-dashboard-kubernetes-cluster-dashboard unchanged

configmap/grafana-dashboard-namespace-by-pod unchanged

configmap/grafana-dashboard-namespace-by-workload unchanged

configmap/grafana-dashboard-node-cluster-rsrc-use unchanged

configmap/grafana-dashboard-node-rsrc-use unchanged

configmap/grafana-dashboard-nodes unchanged

configmap/grafana-dashboard-persistentvolumesusage unchanged

configmap/grafana-dashboard-pod-total unchanged

configmap/grafana-dashboard-prometheus-dashboard unchanged

configmap/grafana-dashboard-prometheus-remote-write unchanged

configmap/grafana-dashboard-prometheus unchanged

configmap/grafana-dashboard-proxy unchanged

configmap/grafana-dashboard-scheduler unchanged

configmap/grafana-dashboard-statefulset unchanged

configmap/grafana-dashboard-workload-total unchanged

configmap/grafana-dashboards unchanged

deployment.apps/grafana configured

service/grafana unchanged

serviceaccount/grafana unchanged

servicemonitor.monitoring.coreos.com/grafana unchanged

ingress.extensions/alertmanager-main unchanged

ingress.extensions/grafana unchanged

ingress.extensions/prometheus-k8s unchanged

clusterrole.rbac.authorization.k8s.io/kube-state-metrics unchanged

clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics unchanged

deployment.apps/kube-state-metrics unchanged

service/kube-state-metrics unchanged

serviceaccount/kube-state-metrics unchanged

servicemonitor.monitoring.coreos.com/kube-state-metrics unchanged

clusterrole.rbac.authorization.k8s.io/node-exporter unchanged

clusterrolebinding.rbac.authorization.k8s.io/node-exporter unchanged

daemonset.apps/node-exporter configured

service/node-exporter unchanged

serviceaccount/node-exporter unchanged

servicemonitor.monitoring.coreos.com/node-exporter unchanged

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io unchanged

clusterrole.rbac.authorization.k8s.io/prometheus-adapter unchanged

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader unchanged

clusterrolebinding.rbac.authorization.k8s.io/prometheus-adapter unchanged

clusterrolebinding.rbac.authorization.k8s.io/resource-metrics:system:auth-delegator unchanged

clusterrole.rbac.authorization.k8s.io/resource-metrics-server-resources unchanged

configmap/adapter-config unchanged

deployment.apps/prometheus-adapter configured

rolebinding.rbac.authorization.k8s.io/resource-metrics-auth-reader unchanged

service/prometheus-adapter unchanged

serviceaccount/prometheus-adapter unchanged

clusterrole.rbac.authorization.k8s.io/prometheus-k8s unchanged

clusterrolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged

service/kube-controller-manager-prometheus-discovery unchanged

service/kube-dns-prometheus-discovery unchanged

service/kube-scheduler-prometheus-discovery unchanged

servicemonitor.monitoring.coreos.com/prometheus-operator unchanged

prometheus.monitoring.coreos.com/k8s unchanged

rolebinding.rbac.authorization.k8s.io/prometheus-k8s-config unchanged

rolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged

rolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged

rolebinding.rbac.authorization.k8s.io/prometheus-k8s unchanged

role.rbac.authorization.k8s.io/prometheus-k8s-config unchanged

role.rbac.authorization.k8s.io/prometheus-k8s unchanged

role.rbac.authorization.k8s.io/prometheus-k8s unchanged

role.rbac.authorization.k8s.io/prometheus-k8s unchanged

prometheusrule.monitoring.coreos.com/prometheus-k8s-rules unchanged

service/prometheus-k8s unchanged

serviceaccount/prometheus-k8s unchanged

servicemonitor.monitoring.coreos.com/prometheus unchanged

servicemonitor.monitoring.coreos.com/kube-apiserver unchanged

servicemonitor.monitoring.coreos.com/coredns unchanged

servicemonitor.monitoring.coreos.com/kube-controller-manager unchanged

servicemonitor.monitoring.coreos.com/kube-scheduler unchanged

servicemonitor.monitoring.coreos.com/kubelet unchanged

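Wait about 5 minutes and look at the same spot on the Kubernetes cluster monitoring (via Prometheus) dashboard again: temperature data is now being collected.

The domain used by the ingresses can be changed as well, again in vars.jsonnet. Set the value of suffixDomain to the domain we want, for example pi4k8s.com, then run make and kubectl apply -f manifests again. Afterwards the ingress hosts show the new domain.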

root@pi4-master01:~# kubectl get ingress -n monitoring

NAME HOSTS ADDRESS PORTS AGE

alertmanager-main alertmanager.pi4k8s.com 80, 443 5h36m

grafana grafana.pi4k8s.com 80, 443 5h36m

prometheus-k8s prometheus.pi4k8s.com 80, 443 5h36m
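For repeatable setups the suffixDomain change can be scripted as well. This is a sketch under an assumption: that vars.jsonnet stores the setting on one line as suffixDomain: '...' with a single-quoted value, which this article does not show. The function name set_suffix_domain is hypothetical.

```shell
#!/bin/sh
# Replace the single-quoted value of suffixDomain.
#   $1 = path to vars.jsonnet
#   $2 = new domain (must not contain "/" or "&", which are special to sed)
set_suffix_domain() {
    sed "s/\(suffixDomain: *\)'[^']*'/\1'$2'/" "$1" > "$1.tmp" &&
        mv "$1.tmp" "$1"
}

# Usage, from the cluster-monitoring-0.37.0 directory:
#   set_suffix_domain vars.jsonnet pi4k8s.com
#   make && kubectl apply -f manifests
```

After the domain changes, the hosts-file entries on the client need to point the new host names at the node IP too.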

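Switching from HTTPS to HTTP access

By default, Prometheus, Grafana and Alertmanager only support HTTPS access. This can be switched to HTTP, again by editing vars.jsonnet in the project directory.

Set the value of TLSingress to false, then run make and kubectl apply -f manifests again. Afterwards the ingresses no longer expose port 443.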

root@pi4-master01:~# kubectl get ingress -n monitoring

NAME HOSTS ADDRESS PORTS AGE

alertmanager-main alertmanager.pi4k8s.com 80 5h51m

grafana grafana.pi4k8s.com 80 5h51m

prometheus-k8s prometheus.pi4k8s.com 80 5h51m

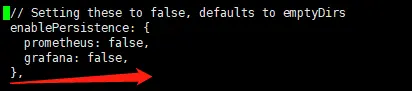

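Persistent storage

By default, Prometheus and Grafana have no persistent storage: once a pod is recreated, user information, dashboards and monitoring data are all lost. To enable persistence, again edit vars.jsonnet in the project directory.

Under enablePersistence, set both prometheus and grafana to true, then run make and kubectl apply -f manifests again. Afterwards the corresponding PVCs and PVs have been created.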

root@pi4-master01:~# kubectl get pv,pvc -n monitoring

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-4a17517f-f063-436c-94a4-01c35f925353 2Gi RWO Delete Bound monitoring/grafana-storage local-path 7s

persistentvolume/pvc-ea070bfd-d7f7-4692-b669-fea32fd17698 20Gi RWO Delete Bound monitoring/prometheus-k8s-db-prometheus-k8s-0 local-path 11s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/grafana-storage Bound pvc-4a17517f-f063-436c-94a4-01c35f925353 2Gi RWO local-path 27s

persistentvolumeclaim/prometheus-k8s-db-prometheus-k8s-0 Bound pvc-ea070bfd-d7f7-4692-b669-fea32fd17698 20Gi RWO local-path 15s
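Both claims above are Bound within seconds. If a claim stays Pending instead (for example because no default storage class is set), the sketch below makes that visible. It uses kubectl's jsonpath output to print one name=phase pair per claim and keeps only those that are not Bound. The function name unbound_pvcs is hypothetical.

```shell
#!/bin/sh
# Print "name=phase" for every PVC in the monitoring namespace that is not
# Bound; prints nothing when all claims are bound.
unbound_pvcs() {
    kubectl get pvc -n monitoring \
        -o jsonpath='{range .items[*]}{.metadata.name}={.status.phase}{"\n"}{end}' |
        awk -F= 'NF == 2 && $2 != "Bound"'
}

# Usage:
#   unbound_pvcs
```

Empty output means grafana-storage and the Prometheus data claim are both bound and the data will survive a pod restart.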

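Summary

With Prometheus monitoring deployed on the k8s cluster, it is as if we developers had been given a pair of all-seeing eyes: the status of every server node and the usage of CPU, memory, storage and network are all visible at a glance. Beyond monitoring the pods and jobs running on every node in real time, we can also extend Prometheus to cover more monitoring scenarios.

To use a Raspberry Pi k8s cluster properly, let's start by installing the Prometheus monitoring system.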
